What is LLM Observability?

LLM Observability provides deep visibility into your AI applications—understanding not just what happened, but why and how to improve.

Definition

LLM Observability is the practice of monitoring, tracing, and analyzing Large Language Model applications to ensure quality, performance, and cost-effectiveness in production.

Why It Matters

Raw observability data is of little use without evaluation. Agents can generate 1000+ traces a day, far too many to review manually, so you need AI-powered evaluation that pinpoints the exact errors and explains its reasoning.

AI-Specific Metrics

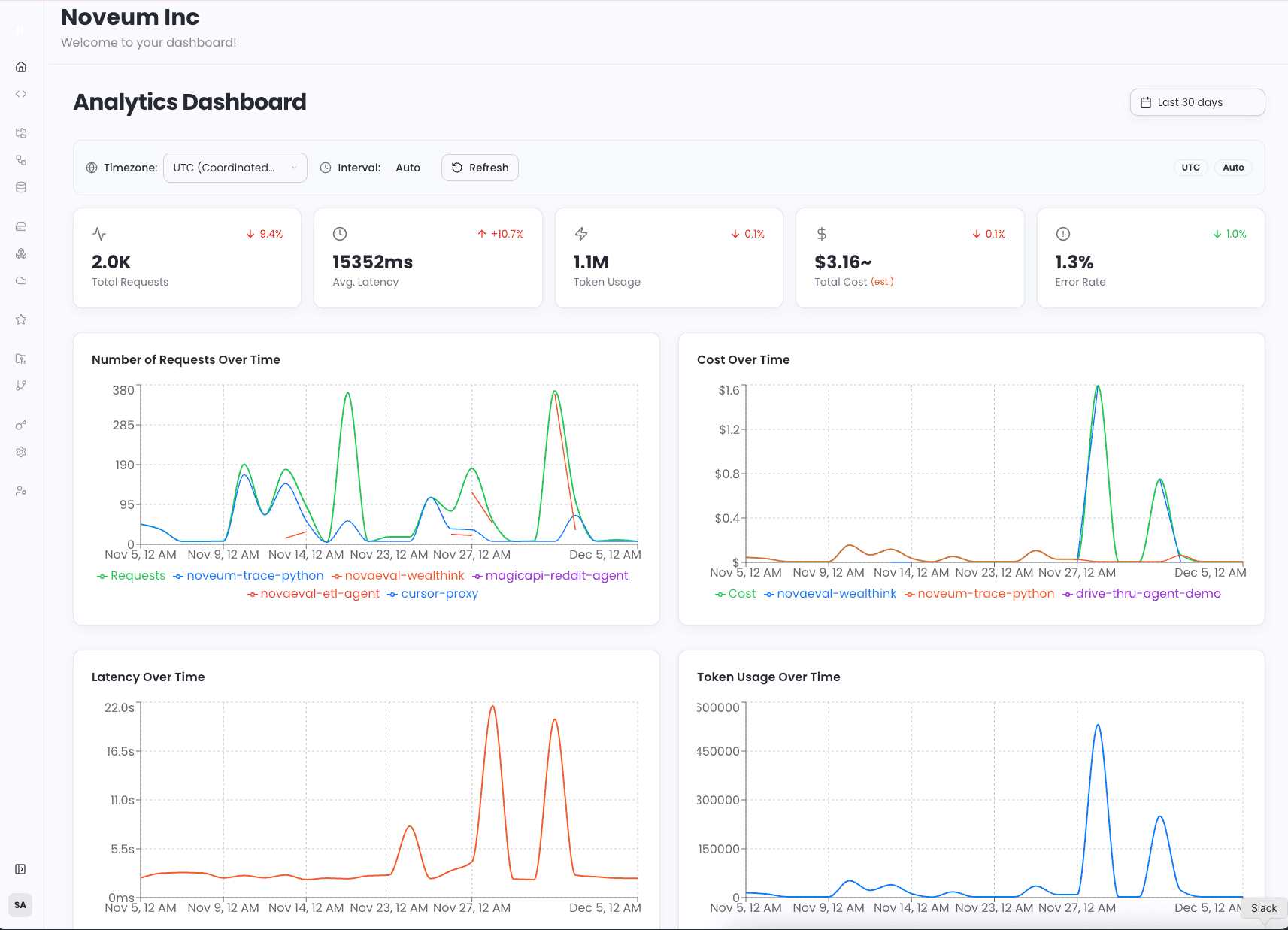

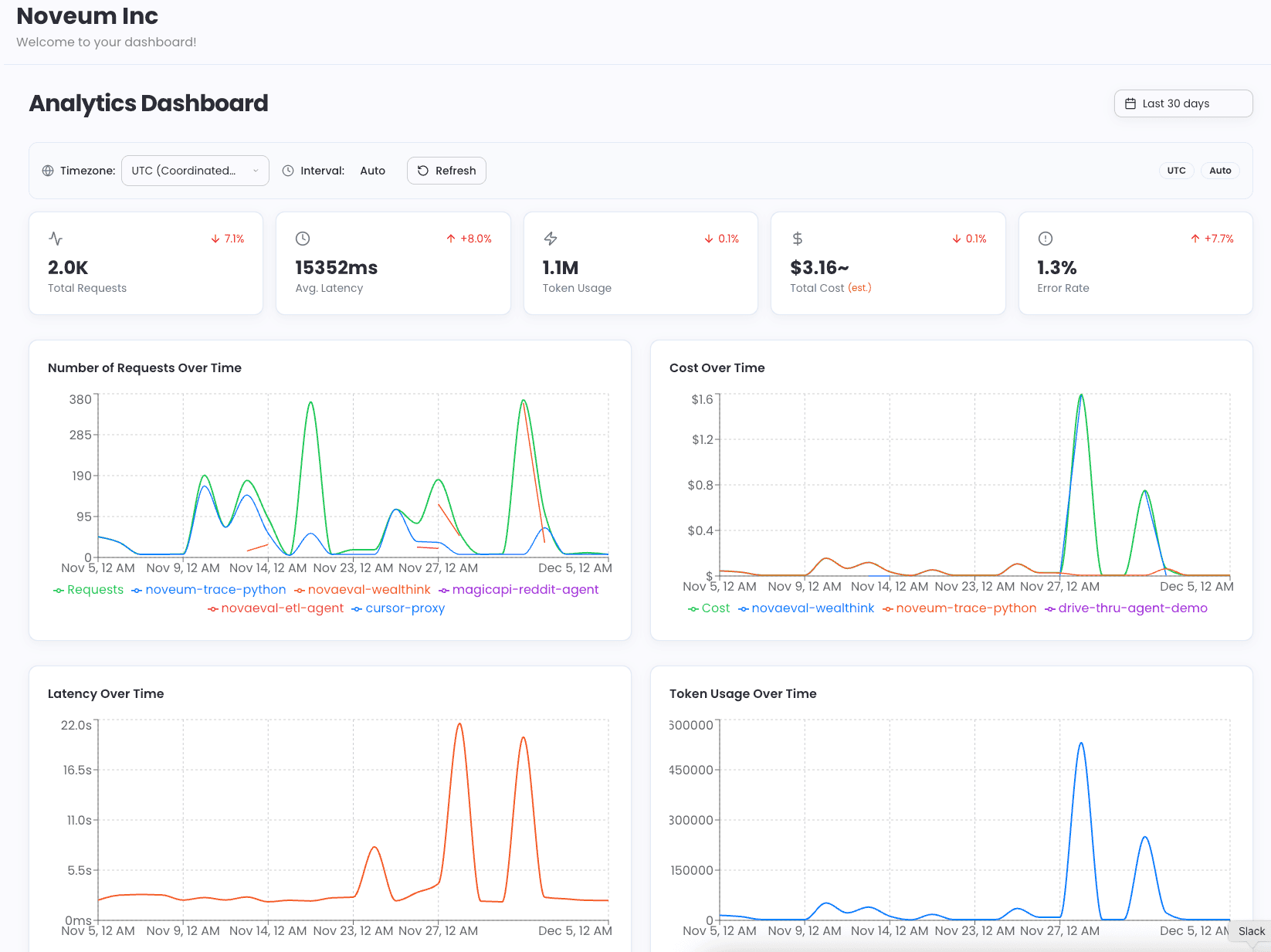

Track metrics that matter for AI: token usage, model costs, response quality, semantic accuracy, hallucination rates, and latency patterns.
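To make the token-usage and cost metrics concrete, here is a minimal sketch of how a per-request cost is typically derived from token counts. The model names and per-1K-token prices are hypothetical placeholders, not real provider pricing, and this is not presented as Noveum's internal calculation.

```python
from dataclasses import dataclass

# Hypothetical per-1K-token prices in USD; real prices vary by provider and change over time.
PRICES_PER_1K = {
    "model-a": {"input": 0.01, "output": 0.03},
    "model-b": {"input": 0.0005, "output": 0.0015},
}


@dataclass
class Usage:
    model: str
    input_tokens: int   # prompt tokens sent to the model
    output_tokens: int  # completion tokens returned by the model


def request_cost(usage: Usage) -> float:
    """Return the USD cost of one LLM call from its token counts."""
    price = PRICES_PER_1K[usage.model]
    return (usage.input_tokens / 1000) * price["input"] + (
        usage.output_tokens / 1000
    ) * price["output"]


cost = request_cost(Usage("model-a", input_tokens=1200, output_tokens=300))
print(f"${cost:.4f}")  # $0.0210
```

Input and output tokens are priced separately because most providers charge different rates for them.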

Beyond Traditional APM

Traditional monitoring tracks requests. LLM observability tracks meaning, quality, and the reasoning behind every AI decision.

Traditional APM vs LLM Observability

| Metric | Traditional APM | LLM Observability |
|---|---|---|
| Token Usage Tracking | ✗ | ✓ |
| Cost Attribution | ✗ | ✓ |
| Response Quality Scoring | ✗ | ✓ |
| Latency Monitoring | ✓ | ✓ |
| Error & Failure Analysis | Errors and exceptions | ✓ (AI Evals) |

Purpose-Built for the AI Era

Our three-pillar approach provides complete observability for any LLM application—from simple chatbots to complex multi-agent systems.

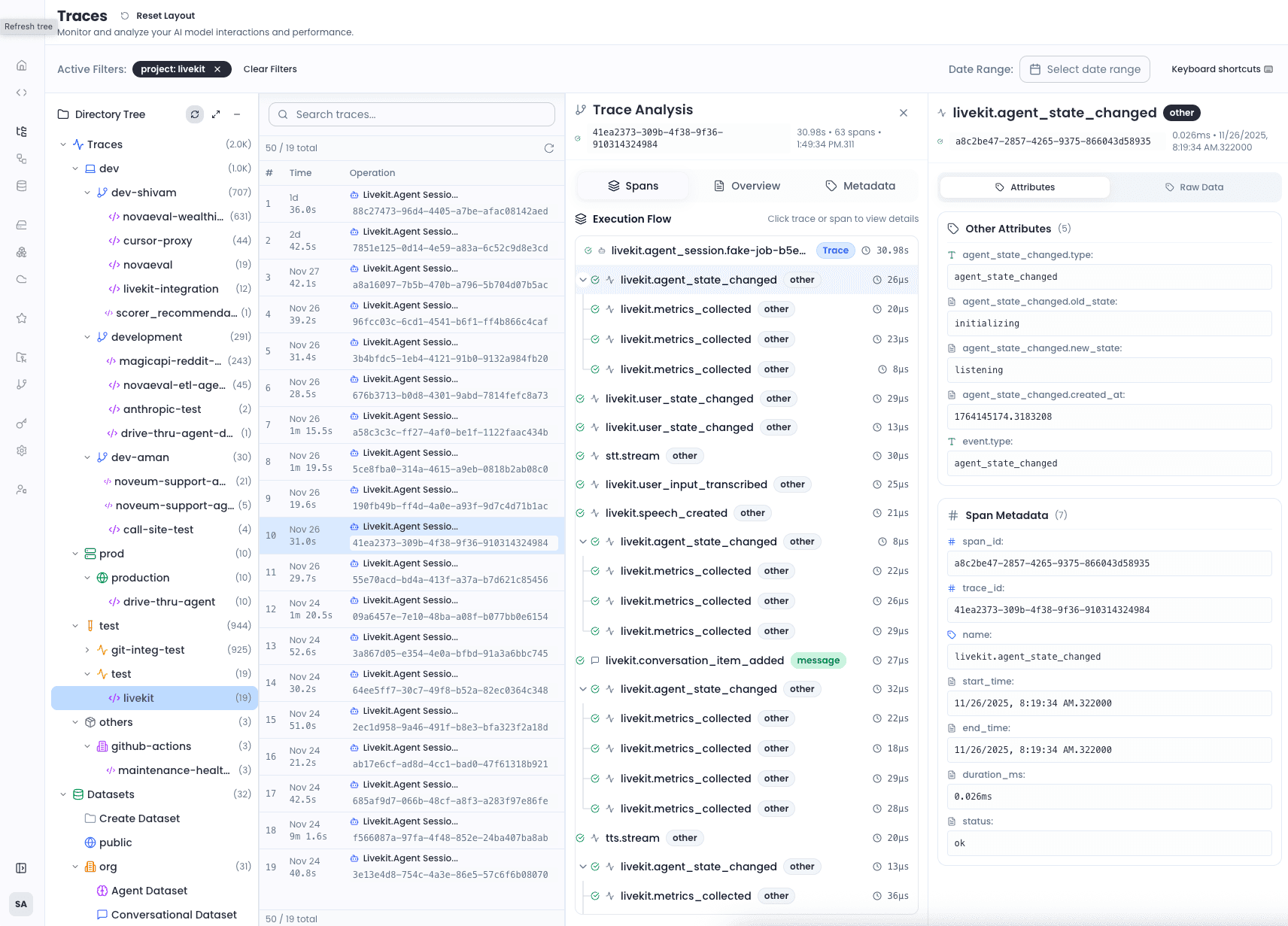

Tracing

Capture every LLM call, RAG retrieval, and agent decision with hierarchical traces that show the complete picture.

- Full request/response capture

- Hierarchical span visualization

- Token and cost attribution
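A hierarchical trace is essentially a tree of spans, where a parent span's totals include those of its children. The sketch below assumes a simple hypothetical `Span` class (not the Noveum SDK's data model) to show how token usage rolls up from individual LLM calls to the whole request.

```python
from dataclasses import dataclass, field


@dataclass
class Span:
    name: str
    tokens: int = 0  # tokens consumed directly by this span (e.g. one LLM call)
    children: list["Span"] = field(default_factory=list)

    def total_tokens(self) -> int:
        """Tokens used by this span plus all of its descendants."""
        return self.tokens + sum(child.total_tokens() for child in self.children)


def render(span: Span, depth: int = 0) -> None:
    """Print the span tree, indenting each level of nesting."""
    print("  " * depth + f"{span.name}: {span.total_tokens()} tokens")
    for child in span.children:
        render(child, depth + 1)


trace = Span("agent.run", children=[
    Span("rag.retrieve"),
    Span("llm.plan", tokens=850),
    Span("tool.search", children=[Span("llm.summarize", tokens=400)]),
])
render(trace)
```

Rolling totals up the tree is what lets a dashboard attribute usage to an entire agent run while still letting you drill down to the single LLM call responsible.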

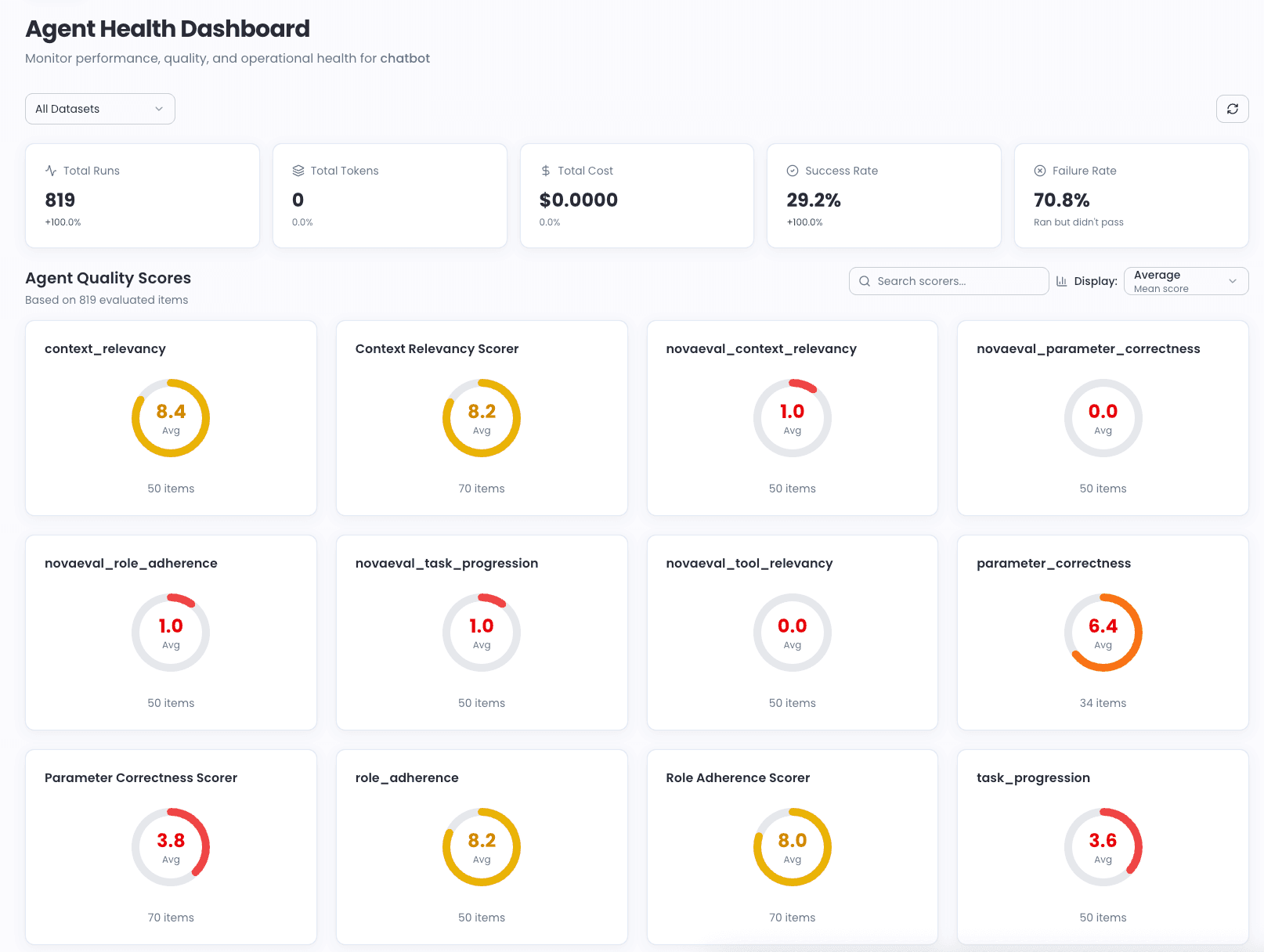

Evaluation

Automatically score every AI interaction with 73+ evaluation metrics, including accuracy, safety, and custom business rules.

- 73+ built-in scorers

- Custom evaluation rules

- Real-time quality monitoring
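Custom evaluation rules usually boil down to a function that takes a response and returns a score plus an explanation. Here is a minimal rule-based scorer in plain Python. The `ScoreResult` type, the 30-day refund rule, and the length limit are all illustrative assumptions, not NovaEval's API.

```python
from dataclasses import dataclass


@dataclass
class ScoreResult:
    score: float    # 0.0 (fail) to 1.0 (pass)
    reasoning: str  # human-readable explanation of the verdict


def refund_policy_scorer(response: str, max_chars: int = 600) -> ScoreResult:
    """Hypothetical business rule: a support reply must state the refund window and stay concise."""
    problems = []
    if "30 days" not in response:
        problems.append("does not state the 30-day refund window")
    if len(response) > max_chars:
        problems.append(f"is {len(response)} chars, over the {max_chars}-char limit")
    if problems:
        return ScoreResult(0.0, "Response " + " and ".join(problems) + ".")
    return ScoreResult(1.0, "Response satisfies all refund-policy rules.")


print(refund_policy_scorer("You can return items for a refund.").reasoning)
# Response does not state the 30-day refund window.
```

Returning the reasoning alongside the score is what makes a failing trace actionable: the reviewer sees *why* it failed, not just that it did.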

AutoFix

NovaPilot analyzes your data and automatically generates fix recommendations—prompt improvements, model changes, and optimizations.

- AI-powered recommendations

- Prompt optimization suggestions

- Model upgrade analysis

Project-Centric Architecture

Organize your AI applications with a clear hierarchy that scales from development to production.

Project Organization

Group related AI workloads under projects with isolated metrics and access controls.

Environment Management

Separate dev, staging, and production with environment-aware dashboards and alerts.

Real-time Processing

Sub-second trace ingestion with immediate availability for monitoring and analysis.

Everything You Need for LLM Observability

From tracing to evaluation to automated fixes, Noveum provides the complete toolkit for AI operations.

Complete Tracing

Capture every interaction in your LLM pipeline with hierarchical traces that reveal the full story of each request.

- LLM calls with full prompts and responses

- RAG pipeline steps with retrieved documents

- Agent workflows and tool executions

- Custom spans for any operation

Dashboard Analysis

Real-time dashboards with AI-specific metrics that give you instant insights into your production LLM applications.

- Request volume and latency trends

- Model distribution and usage patterns

- Error rates and failure analysis

- Custom metric visualization
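Latency trends are usually summarized with percentiles rather than averages, because a few slow requests can hide behind a healthy mean. The sketch below computes p50, p95, and the error rate from a hypothetical request log, using only the standard library.

```python
import statistics

# Hypothetical request log: (latency in ms, succeeded?)
requests = [
    (120, True), (95, True), (310, False), (150, True), (2000, False),
    (110, True), (130, True), (140, True), (100, True), (105, True),
]

latencies = [ms for ms, _ in requests]
# quantiles(n=100) returns the 99 cut points between percentiles: index 49 is p50, index 94 is p95.
# method="inclusive" keeps the estimates within the observed min/max.
cuts = statistics.quantiles(latencies, n=100, method="inclusive")
p50, p95 = cuts[49], cuts[94]
error_rate = sum(1 for _, ok in requests if not ok) / len(requests)

print(f"p50={p50:.0f}ms p95={p95:.0f}ms error_rate={error_rate:.0%}")
```

Here the median looks healthy while p95 exposes the slow tail, which is exactly the gap a mean-only dashboard would hide.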

Cost Attribution

Understand exactly where your LLM budget goes with granular cost tracking across projects, models, and requests.

- Per-request cost calculation

- Model-level cost comparison

- Project and team cost allocation

- Budget alerts and forecasting
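Project- and model-level cost allocation is an aggregation over per-request costs, and a budget alert is a threshold check on the result. The records and budgets below are hypothetical sample values, used only to illustrate the idea.

```python
from collections import defaultdict

# Hypothetical per-request records: (project, model, cost in USD)
records = [
    ("support-bot", "model-a", 0.021),
    ("support-bot", "model-b", 0.002),
    ("search", "model-a", 0.035),
    ("support-bot", "model-a", 0.018),
]
# Hypothetical budgets per project, in USD.
budgets = {"support-bot": 0.03, "search": 0.10}

by_project: dict[str, float] = defaultdict(float)
by_model: dict[str, float] = defaultdict(float)
for project, model, cost in records:
    by_project[project] += cost  # project/team allocation
    by_model[model] += cost      # model-level comparison

# A project triggers an alert once its total spend exceeds its budget.
alerts = [project for project, spent in by_project.items() if spent > budgets[project]]
for project in alerts:
    print(f"ALERT: {project} spent ${by_project[project]:.3f} of ${budgets[project]:.3f} budget")
# ALERT: support-bot spent $0.041 of $0.030 budget
```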

Quality Scoring with NovaEval

Automatically evaluate every LLM response with our comprehensive scorer library—no manual review required.

- 73+ evaluation scorers

- Accuracy and relevance metrics

- Safety and bias detection

- Custom business rule evaluation
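Accuracy and relevance scorers range from simple lexical checks to LLM-as-judge evaluators. To illustrate only the simplest end of that range, here is a token-overlap F1 score against a reference answer. This is a generic, well-known metric, not one of NovaEval's scorers.

```python
import re
from collections import Counter


def tokens(text: str) -> list[str]:
    """Lowercase alphanumeric word tokens; punctuation is dropped."""
    return re.findall(r"[a-z0-9]+", text.lower())


def token_f1(response: str, reference: str) -> float:
    """Token-overlap F1 between a response and a reference answer (0.0 to 1.0)."""
    resp, ref = Counter(tokens(response)), Counter(tokens(reference))
    overlap = sum((resp & ref).values())  # shared tokens, counting duplicates at most min(count) times
    if overlap == 0:
        return 0.0
    precision = overlap / sum(resp.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


print(round(token_f1("Paris.", "The capital of France is Paris."), 2))  # 0.29
```

The correct but terse answer "Paris." scores only 0.29, which shows why lexical overlap is a weak proxy for correctness and why production evaluators often rely on embeddings or an LLM judge instead.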

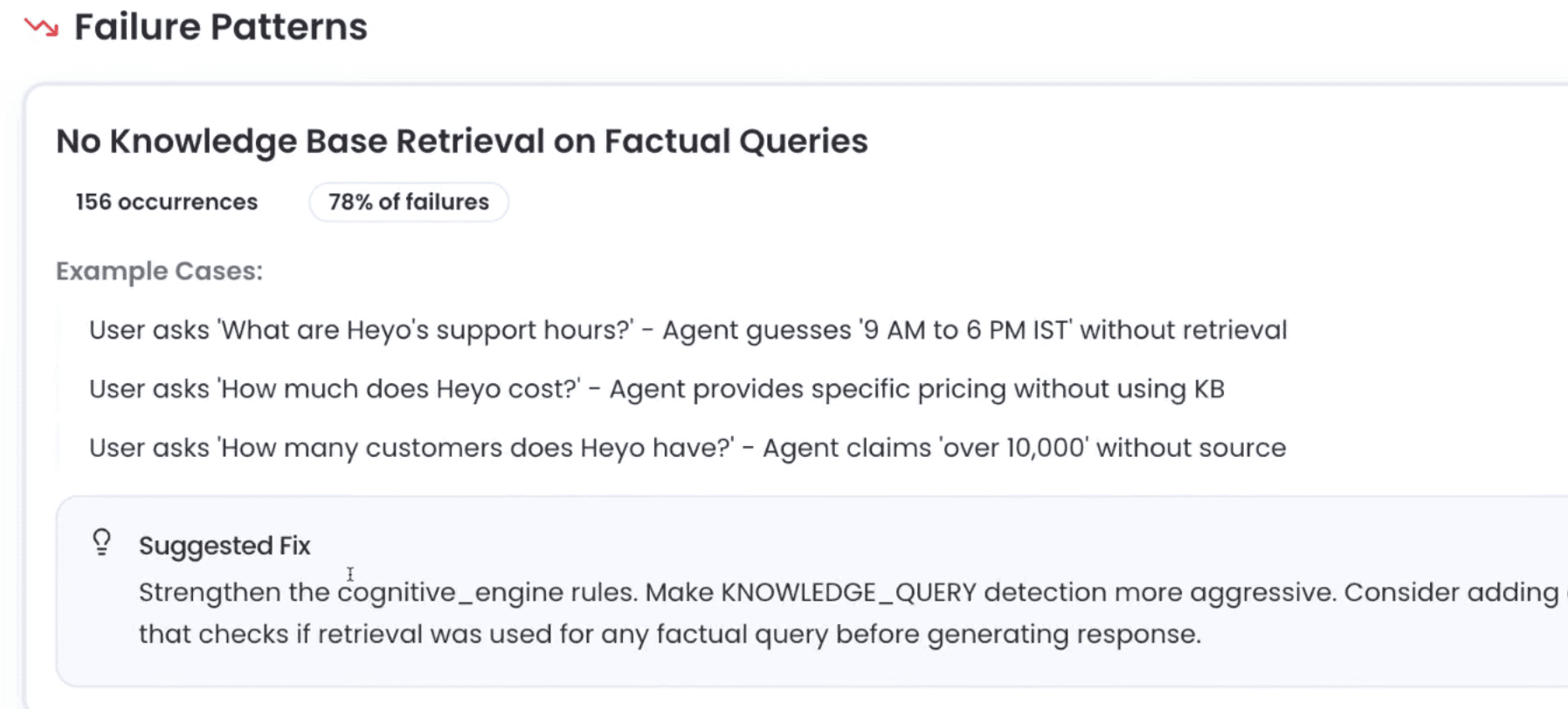

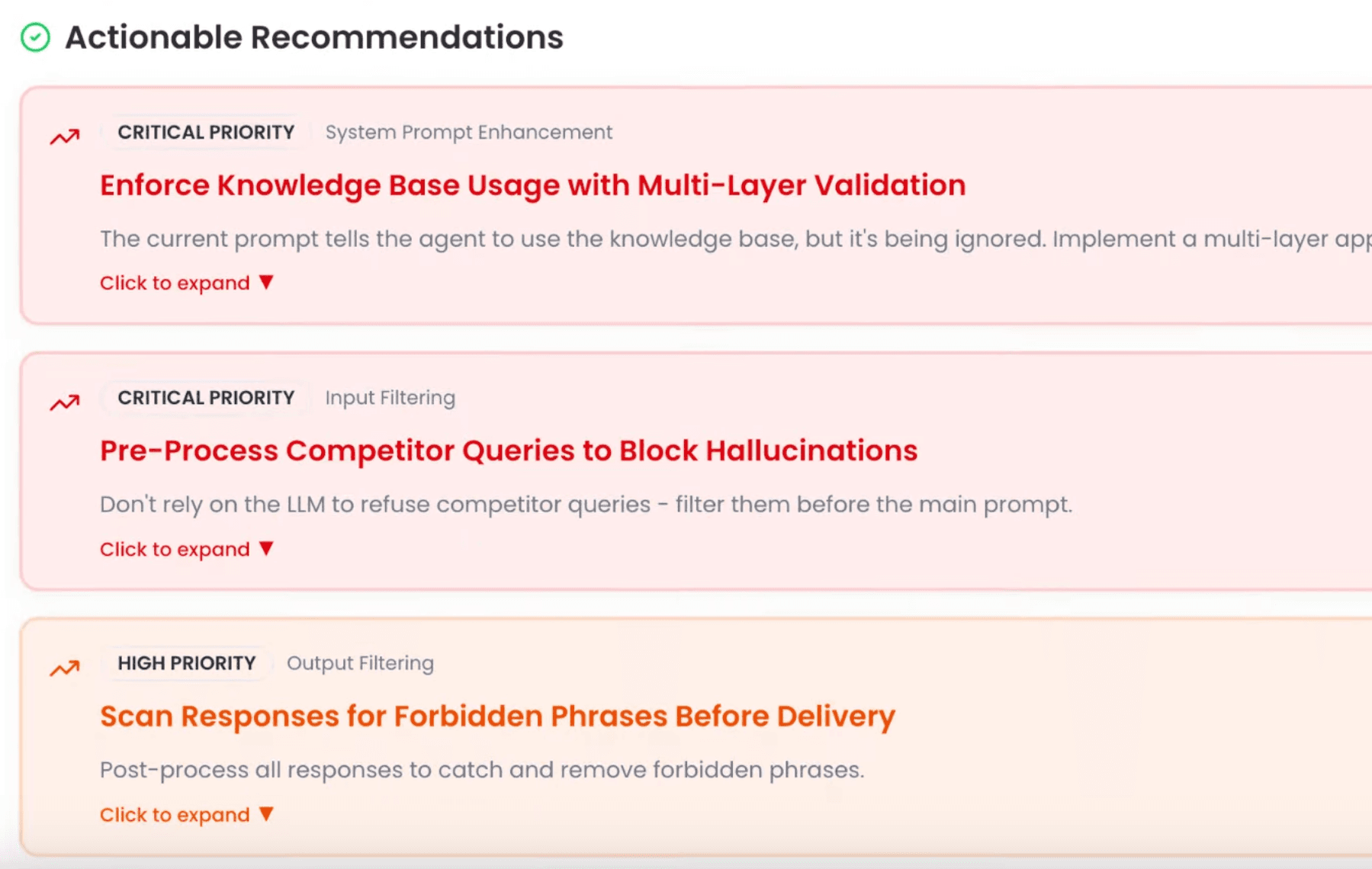

AutoFix with NovaPilot

NovaPilot, our AI engineer, analyzes your data and automatically generates actionable fix recommendations.

- Prompt optimization suggestions

- Model upgrade recommendations

- Configuration improvements

- Automated fix validation

Works With Your AI Stack

Native integrations with all major frameworks—plus OpenTelemetry for custom implementations.

LangChain

First-class LangChain integration with automatic chain and agent tracing.

LlamaIndex

Complete LlamaIndex support for RAG pipelines and document processing.

CrewAI

Multi-agent workflow monitoring with crew and task-level visibility.

AutoGen

Microsoft AutoGen integration for conversational agent systems.

LangGraph

Graph-based agent workflows with state and transition tracking.

OpenTelemetry

Standard OTEL support for any custom AI implementation.

All Major AI Providers Supported

Automatic cost and token tracking for every supported provider.

Built for Enterprise Scale

Security, compliance, and collaboration features that meet enterprise requirements.

Multi-Tenant Architecture

Complete data isolation between organizations with granular access controls.

- Organization-level isolation

- Role-based access control
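Organization-level isolation and role-based access control can be combined into a single permission check: first confirm the resource belongs to the caller's organization, then confirm the caller's role grants the action. The roles and action names below are hypothetical and only illustrate the pattern.

```python
from dataclasses import dataclass

# Hypothetical roles and the actions each one may perform.
ROLE_PERMISSIONS = {
    "viewer": {"read_traces"},
    "developer": {"read_traces", "write_scorers"},
    "admin": {"read_traces", "write_scorers", "manage_members"},
}


@dataclass(frozen=True)
class User:
    org_id: str
    role: str


def can(user: User, action: str, resource_org_id: str) -> bool:
    """Allow an action only inside the user's own organization and only if the role grants it."""
    if user.org_id != resource_org_id:  # organization-level isolation is checked first
        return False
    return action in ROLE_PERMISSIONS.get(user.role, set())  # unknown roles get no permissions


print(can(User("acme", "viewer"), "read_traces", "acme"))
```

Checking the organization before the role means even an admin can never reach another tenant's data.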

Multi-Region Deployment

Deploy in any region to meet data residency requirements and reduce latency.

- Global edge ingestion

- Regional data storage

Advanced Security

Enterprise-grade security with encryption, SSO, and audit capabilities.

- End-to-end encryption

- SSO and SAML support

Compliance & Audit

Comprehensive audit logs and compliance features for regulated industries.

- Complete audit trails

- Data retention policies
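At its core, a data retention policy is a per-environment age limit applied to stored traces. This sketch assumes hypothetical windows of 7 days for development and 90 days for production; the numbers are illustrative only.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention windows per environment.
RETENTION = {"development": timedelta(days=7), "production": timedelta(days=90)}


def expired(trace_time: datetime, environment: str, now: datetime) -> bool:
    """True if a trace is older than its environment's retention window."""
    return now - trace_time > RETENTION[environment]


now = datetime(2024, 6, 30, tzinfo=timezone.utc)
traces = [
    ("t1", datetime(2024, 6, 1, tzinfo=timezone.utc), "development"),
    ("t2", datetime(2024, 6, 1, tzinfo=timezone.utc), "production"),
    ("t3", datetime(2024, 6, 28, tzinfo=timezone.utc), "development"),
]
# Keep only traces still inside their retention window; the rest would be purged.
kept = [trace_id for trace_id, ts, env in traces if not expired(ts, env, now)]
print(kept)  # ['t2', 't3']
```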

Team Collaboration

Built-in collaboration features for AI teams of any size.

- Shared dashboards

- Annotation and commenting

Custom Metrics

Define and track business-specific metrics tailored to your use case.

- Custom scorer creation

- Business KPI tracking

Get LLM Observability in Minutes

Add comprehensive observability to your LLM applications with just a few lines of code.

```shell
$ pip install noveum-trace langchain-openai
```

```python
import os

import noveum_trace
from noveum_trace import NoveumTraceCallbackHandler
from langchain_openai import ChatOpenAI

# Initialize Noveum Trace
noveum_trace.init(
    api_key=os.getenv("NOVEUM_API_KEY"),
    project="my-llm-app",
    environment="development",
)

# Create callback handler
callback_handler = NoveumTraceCallbackHandler()

# Add callback to your LLM
# (ChatOpenAI reads your OpenAI key from the OPENAI_API_KEY environment variable)
llm = ChatOpenAI(
    model="gpt-4",
    callbacks=[callback_handler],
)

# All LLM calls are now automatically traced!
response = llm.invoke("What is the capital of France?")
```
