Noveum.ai vs Arize & Braintrust

A detailed, practical reference comparing evaluation philosophies, data needs, integrations, outputs, and recommended workflows.

Useful for product teams, engineering leads, reliability engineers, and investors evaluating LLM & agent observability solutions.

Noveum.ai: "Did the agent follow the process correctly?"

Arize: "Was the output correct vs. ground truth?"

Braintrust: "Does the answer match the expected output?"

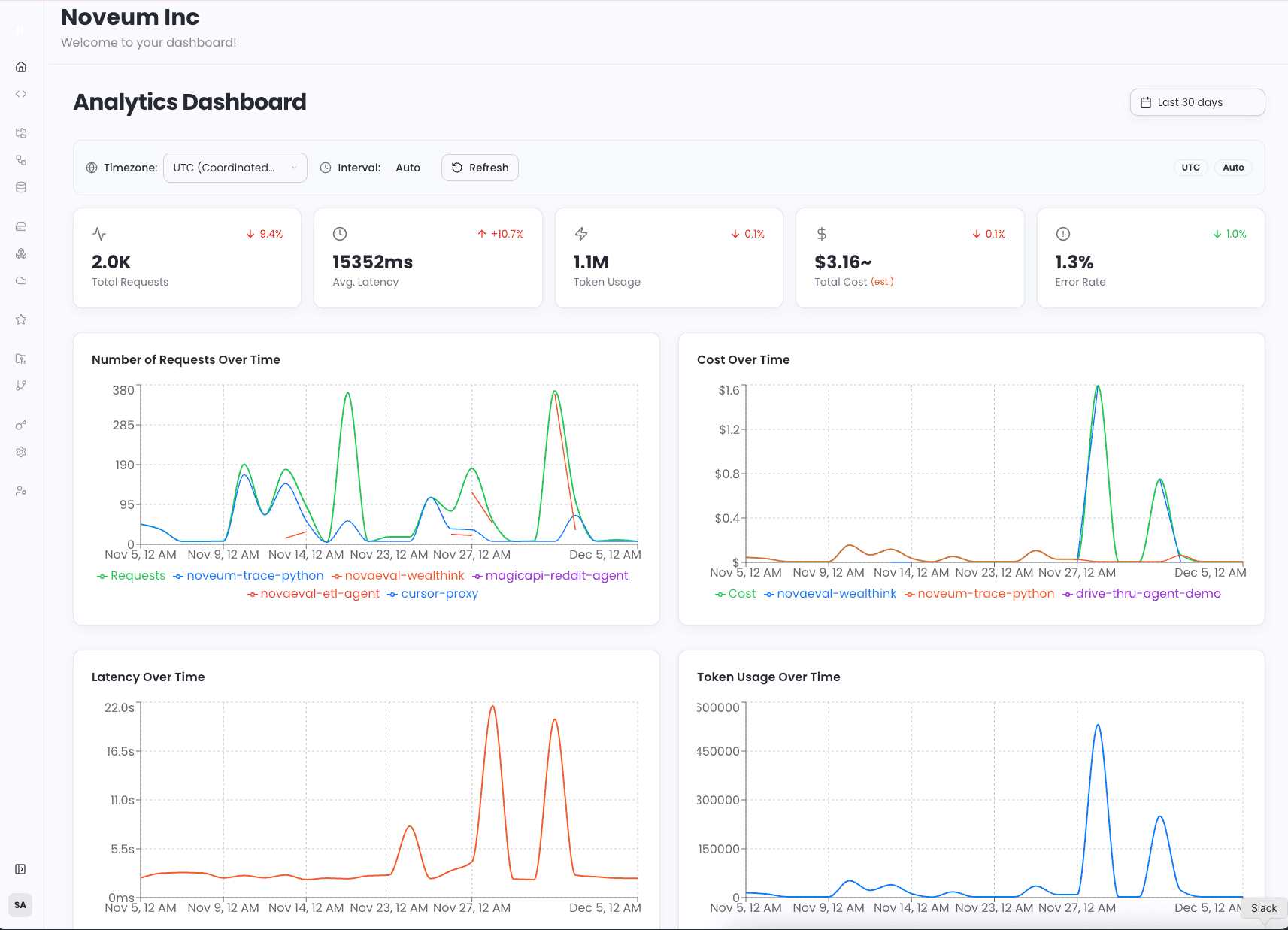

Noveum.ai Analytics Dashboard — Real-time agent monitoring & evaluation with 68+ built-in scorers

Understanding the Key Differences

Each platform excels in different areas. Understanding these differences will help you choose the right solution for your specific needs.

Noveum.ai

Full-stack LLM & agent evaluation + compliance platform built around trace-level understanding.

Primary Focus:

Evaluates how and why an agent behaved the way it did

- 68+ built-in scorers across 13 categories

- No ground truth required for most evaluations

- Complete execution traces with tool calls

- LLM-as-judge scoring for quality detection

- Agent behavior, RAG, hallucination, safety

Arize

Best suited for traditional machine learning (ML) and statistical monitoring.

Primary Focus:

Measures final answer correctness against ground truth

- Feature drift detection (PSI)

- Embedding-based monitoring

- Classical ML governance

- Statistical model monitoring

- Model comparison across versions

Braintrust

Best suited for human annotation, gold-label creation, and training-data generation.

Primary Focus:

Measures final answer correctness against ground truth

- Large-scale human annotation workflows

- Reviewer management and QA

- Dataset creation for audits

- Training data generation

- Input + expected pairs evaluation

One-Line Differentiator

Noveum.ai: "Did the agent follow the process and use the system/tools/context correctly?"

Arize / Braintrust: "Was the final answer correct compared to a ground truth?"

This fundamental difference shapes everything else: data requirements, evaluation methods, integration complexity, and ideal use cases.

High-Level Comparison Table

Noveum currently provides 68+ built-in scorers across 13 categories, including Agent, Conversational, RAG, Safety, Hallucination, Quality, and more.

| Dimension | Noveum.ai | Arize | Braintrust |
|---|---|---|---|
| Primary focus | Agent trajectory, trace correctness, behavioral checks | Model output quality, observability, dataset-driven evals | Dataset creation, annotation, expected-output-based evals |
| Ground truth required? | Not for most checks | Often, for accuracy metrics | Yes; input + expected pairs are central |
| Uses production traces? | Yes; they are the core data source | Yes, but ground-truth metrics need labeling | Yes; can generate datasets, but expected values must be added |
| Hallucination detection | Structural detection (context mismatch, fabricated outputs) | Possible via LLM-as-judge or human labels | Human annotation required |
| Typical metrics | Prompt adherence, tool-usage correctness, context leakage, step validity, drift | Accuracy, precision/recall, calibration, ROUGE/BLEU proxies, embedding similarity | Accuracy, annotator consensus, dataset coverage |
| Best for | Agent debugging, reliability engineering, production monitoring | Model benchmarking, drift detection, LLM QA | Curating labeled test sets and human-in-the-loop evals |
| Integration complexity | SDK + trace schema + agent instrumentation | SDK + traces + annotation pipelines | Dataset upload + annotation UI/APIs |

Core Concepts Explained

Before comparing platforms, it's essential to understand these fundamental concepts that differentiate evaluation approaches.

Trajectory vs. Output

Trajectory

The ordered sequence of events the agent performs: system prompt, tool calls (with arguments), tool responses, retrievals, intermediate reasoning steps, and final answer.

Evaluating trajectory checks HOW the model arrived at an answer.

Output

Final text/structured response. Output evaluation checks WHAT the model answered against a reference.

Noveum inspects trajectory. Arize/Braintrust are usually focused on output.
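To make the distinction concrete, here is a minimal Python sketch that models a trace as an ordered list of events. The `Event` shape, the event kinds, and the `refund_api` tool are hypothetical illustrations, not Noveum's trace schema. A trajectory check inspects the sequence of events; an output check compares only the final answer with a reference.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Event:
    """One step in an agent trace (hypothetical schema for illustration)."""
    kind: str   # e.g. "system_prompt", "tool_call", "tool_response", "final_answer"
    name: str = ""  # tool name, for tool events
    payload: dict[str, Any] = field(default_factory=dict)


def trajectory_check(trace: list[Event], required_tool: str) -> bool:
    """HOW: was `required_tool` called before the final answer was produced?"""
    for event in trace:
        if event.kind == "final_answer":
            return False  # the agent answered without calling the required tool
        if event.kind == "tool_call" and event.name == required_tool:
            return True
    return False


def output_check(trace: list[Event], expected: str) -> bool:
    """WHAT: does the last final answer exactly match the reference answer?"""
    finals = [e for e in trace if e.kind == "final_answer"]
    return bool(finals) and finals[-1].payload.get("text", "").strip() == expected.strip()


# The answer text is "right", but the agent never called the refund tool.
trace = [
    Event("system_prompt", payload={"text": "You are a billing assistant."}),
    Event("final_answer", payload={"text": "Your refund has been issued."}),
]
```

Here `output_check(trace, "Your refund has been issued.")` passes while `trajectory_check(trace, "refund_api")` fails: an output-only evaluation would score this trace as correct even though the refund was never actually issued.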

Ground Truth and When It Matters

When Ground Truth is Essential

- Measuring correctness or computing traditional metrics

- Accuracy, exact-match, and BLEU/ROUGE evaluations

- Ground-truth-based workflows in Braintrust and Arize

When Ground Truth is Optional

- Structural or behavioral failures

- Calling the wrong tool

- Using context not supplied

- Fabricating numbers not present in data

These can be detected with robust trace analysis alone (Noveum's approach).
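As one example of a check that needs no ground truth, the sketch below flags numbers in a final answer that do not appear in any tool output or retrieved document from the same trace. It is a deliberately simple, hypothetical heuristic (regex number extraction plus exact matching after stripping thousands separators), not Noveum's actual scorer.

```python
import re

# Integers or decimals, optionally with thousands separators: 42, 3.5, 1,200.50
NUMBER = re.compile(r"\d+(?:[.,]\d+)*")


def numbers_in(text: str) -> set[str]:
    """Extract numeric tokens, dropping comma separators (1,200.50 -> 1200.50)."""
    return {m.replace(",", "") for m in NUMBER.findall(text)}


def unsupported_numbers(answer: str, evidence: list[str]) -> set[str]:
    """Numbers stated in the answer that no tool output or retrieved doc contains."""
    supported: set[str] = set()
    for text in evidence:
        supported |= numbers_in(text)
    return numbers_in(answer) - supported


evidence = ["Account balance: 1,200.50 USD as of 2024-03-01"]  # e.g. a tool response
answer = "Your balance is 1200.50 USD and your credit limit is 5000."
```

With these inputs, `unsupported_numbers(answer, evidence)` returns `{"5000"}`: the credit limit was never present in the trace, so it is a candidate fabrication. Real scorers would also need to handle units, rounding, and paraphrased quantities.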

LLM-as-Judge: A Middle Path

Arize & Others

Support LLM-as-Judge: use an LLM to score outputs with heuristics rather than fixed expected values. This reduces manual labeling but introduces judge noise and calibration needs.

Noveum's Approach

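Noveum's trajectory checks are less subjective: they rely on deterministic schemas, tool contracts, and trace invariants, which are easier to operationalize for agent correctness. LLM-as-judge scorers complement them for qualitative dimensions such as hallucination and completeness.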
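A deterministic check of this kind can be as simple as validating each recorded tool call against a declared contract. The sketch below assumes a hypothetical contract format (required argument names mapped to Python types) and a hypothetical `transfer_funds` tool; in practice, contracts are often written as JSON Schema and validated with a schema library instead.

```python
from typing import Any

# Hypothetical tool contracts: tool name -> {argument name: expected Python type}
CONTRACTS: dict[str, dict[str, type]] = {
    "transfer_funds": {"from_account": str, "to_account": str, "amount": float},
}


def contract_violations(tool_name: str, args: dict[str, Any]) -> list[str]:
    """Return human-readable violations for one tool call; an empty list means OK."""
    contract = CONTRACTS.get(tool_name)
    if contract is None:
        return [f"unknown tool: {tool_name}"]
    problems = []
    for arg, expected_type in contract.items():
        if arg not in args:
            problems.append(f"missing argument: {arg}")
        elif not isinstance(args[arg], expected_type):
            problems.append(
                f"{arg}: expected {expected_type.__name__}, got {type(args[arg]).__name__}"
            )
    # Arguments the contract does not declare are also reported, in stable order.
    for arg in sorted(args.keys() - contract.keys()):
        problems.append(f"unexpected argument: {arg}")
    return problems
```

For example, `contract_violations("transfer_funds", {"from_account": "A1", "amount": "100"})` reports both the missing `to_account` and the string-typed `amount`. Because the result is the same every time for the same trace, it can drive alerts without judge noise.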

Detailed Differences by Category

Explore the specific differences across key dimensions to understand which platform best fits your needs.

Data & Schema

Noveum

- Trace events (system prompt, user input, each agent step, tool call events, tool responses, retrieved docs, embeddings)

- Metadata (request id, timestamps, user id), agent config

- Ordered events with typed tool-call records

- Source of truth for retrievals, tokenized contexts

- No required expected_answer column for many failure modes

Arize

- Model inputs and outputs, optional ground truth labels

- Scoring metadata (confidence, probabilities), embeddings, features

- Input/output pairs, label columns for ground-truth metrics

- Timestamps for drift analysis

Braintrust

- Dataset rows with input, expected, plus optional annotation fields

- Tags, notes, annotator id

- Canonical dataset shape enabling human annotation

- Consensus and dataset export

Annotation Workflows

Noveum

- Minimal human annotation required

- Human review used for escalations or one-off signals

- Focus on automating detection via rule-based or trace-aware heuristics

Arize

- Supports human-labeled datasets for ground truth

- Human review to create high-quality test sets for calibration

Braintrust

- Built for human annotation at scale

- Tools to crowdsource or manage annotators

- Dataset versioning and quality control

Hallucination Detection

Noveum

- Compare statements/claims against retrieved context and tool outputs in trace

- Detect unsupported claims or invented tool outputs

- Flag context leakage and out-of-context assertions

Arize

- Human labeling of hallucinations

- LLM-as-Judge prompts that assess hallucination likelihood

- Requires careful calibration

Braintrust

- Relies on annotators to mark hallucinated content

- Produces human-grounded labels
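The trace-based approach described for Noveum compares claims against what the trace actually contains. The sketch below approximates that with a crude, hypothetical heuristic: split the answer into sentences and flag any sentence whose content words are mostly absent from the retrieved context and tool outputs. Production scorers typically use an LLM judge or an entailment model instead; the stopword list and the 0.5 threshold here are arbitrary assumptions.

```python
import re

# Tiny illustrative stopword list; a real system would use a proper one.
STOPWORDS = {"the", "a", "an", "is", "are", "was", "of", "to", "and", "in", "for", "on", "your", "it"}


def content_words(text: str) -> set[str]:
    """Lowercased alphanumeric tokens minus stopwords."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def unsupported_sentences(answer: str, context: list[str], threshold: float = 0.5) -> list[str]:
    """Flag answer sentences whose content-word overlap with the context is below threshold."""
    context_words: set[str] = set()
    for chunk in context:
        context_words |= content_words(chunk)
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = content_words(sentence)
        if words and len(words & context_words) / len(words) < threshold:
            flagged.append(sentence)
    return flagged


context = ["The premium plan costs 30 dollars per month and includes priority support."]
answer = "The premium plan costs 30 dollars per month. It also includes a free laptop."
```

Here only "It also includes a free laptop." is flagged: the pricing sentence is fully grounded in the retrieved context, while the laptop claim has no support anywhere in the trace.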

Metrics & Alerts

Noveum

- Alerts on structural failures (tool-misuse rate, context-mismatch rate, prompt-violation rate)

- Trending of failure modes

- Per-agent scenario scoring

Arize

- Quantitative metrics (accuracy, F1, calibration curves, similarity scores)

- Drift detection, feature attribution

- Per-model comparisons

Braintrust

- Dataset quality metrics (annotator agreement, coverage)

- Evaluation run metrics (pass/fail vs expected answers)
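Rate-based alerts such as tool-misuse rate or context-mismatch rate reduce to counting flagged traces per failure mode over a time window and comparing each rate with a threshold. A minimal sketch, assuming each trace has already been scored into a set of failure-mode labels; the label names and the 5% threshold are hypothetical.

```python
from collections import Counter


def failure_rates(trace_flags: list[set[str]]) -> dict[str, float]:
    """Fraction of traces in the window that exhibit each failure mode."""
    if not trace_flags:
        return {}
    counts = Counter(mode for flags in trace_flags for mode in flags)
    return {mode: n / len(trace_flags) for mode, n in counts.items()}


def rate_alerts(trace_flags: list[set[str]], threshold: float = 0.05) -> list[str]:
    """Failure modes whose rate exceeds the threshold, sorted by name."""
    rates = failure_rates(trace_flags)
    return sorted(mode for mode, rate in rates.items() if rate > threshold)


# A window of 40 traces: 3 with tool misuse, 1 with a context mismatch.
window = [set() for _ in range(36)] + [{"tool_misuse"}] * 3 + [{"context_mismatch"}]
```

In this window the tool-misuse rate is 7.5% and triggers an alert, while the 2.5% context-mismatch rate does not. Storing these rates per window also gives you the failure-mode trend lines mentioned above.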

Use Cases

Noveum

- Production agent monitoring

- Compliance checking for tool usage

- Debugging complex agent orchestration

- Regression detection in agent reasoning flows

- Live traffic checks without labeling burden

Arize

- Model comparison and benchmarking

- Long-term metric tracking

- Experiments comparing model versions

- Supervised metric reporting to stakeholders

Braintrust

- Curated benchmark creation

- Dataset curation for supervised evaluation

- Human-in-the-loop labeling projects

Example Workflows

See how each platform is typically used in practice with these real-world workflow examples.

Production Monitoring with Noveum

No Ground Truth Required

Ingest Traces

Integrate the noveum-trace SDK to capture structured trace events from your AI agents in production

Auto-Generate Datasets

Traces are automatically converted to dataset items using AI-generated ETL mapper code

Run Evals & Scorers

68+ built-in scorers analyze each trace, identify errors, and provide detailed reasoning for every issue

NovaPilot Experiments

An AI agent uses the scores and reasoning to generate candidate fixes as experiments, running them in simulated environments over successive generations

Auto-Apply Fixes

Final tested improvements (prompts, models, parameters) are delivered as a PR ready to merge
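The "Run Evals & Scorers" step can be pictured as applying a registry of scorers to each trace, where every scorer returns a score together with its reasoning, and the failing reasons feed the next step. The sketch below is a hypothetical, in-process illustration with two toy scorers and a hypothetical dict-based trace; it is not the noveum-trace SDK API, and Noveum's real scorers (many of them LLM-as-judge) run on the platform.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ScoreResult:
    scorer: str
    score: float  # 0.0 (fail) .. 1.0 (pass)
    reasoning: str


Trace = dict  # hypothetical shape: {"tool_calls": [...], "final_answer": str}
Scorer = Callable[[Trace], ScoreResult]


def answer_nonempty(trace: Trace) -> ScoreResult:
    ok = bool(trace.get("final_answer", "").strip())
    return ScoreResult("answer_nonempty", 1.0 if ok else 0.0,
                       "final answer present" if ok else "final answer is empty")


def used_any_tool(trace: Trace) -> ScoreResult:
    n = len(trace.get("tool_calls", []))
    return ScoreResult("used_any_tool", 1.0 if n else 0.0, f"{n} tool call(s) recorded")


def run_scorers(trace: Trace, scorers: list[Scorer]) -> list[ScoreResult]:
    """Apply every scorer to one trace, keeping each result's reasoning."""
    return [scorer(trace) for scorer in scorers]


def failures(results: list[ScoreResult], pass_mark: float = 0.5) -> list[str]:
    """Reasoning for scorers below the pass mark, e.g. as input to a fix loop."""
    return [f"{r.scorer}: {r.reasoning}" for r in results if r.score < pass_mark]
```

Keeping the reasoning alongside each numeric score is what makes the later steps possible: an automated fixer needs to know *why* a trace failed, not just that it did.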

Benchmarking with Arize

Small Ground Truth Set

Collect Traces

Gather representative traces from production

Create Labels

Sample a subset and create human-labeled expected answers

Upload Data

Upload input/output/expected to Arize

Run Reports

Run accuracy and calibration reports; use LLM-as-Judge to expand coverage

Model Selection

Use results to choose model/version for production
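Once a labeled subset exists, the core accuracy number is easy to recompute locally, which helps when sanity-checking platform reports. A minimal sketch of normalized exact-match accuracy per model version; the row format, normalization rules, and example rows are hypothetical, and this does not use Arize's SDK.

```python
import re
from collections import defaultdict


def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace."""
    text = re.sub(r"[^\w\s]", "", text.lower())
    return " ".join(text.split())


def exact_match_accuracy(rows: list[dict]) -> dict[str, float]:
    """rows of {"model", "output", "expected"} -> exact-match accuracy per model."""
    hits: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for row in rows:
        totals[row["model"]] += 1
        hits[row["model"]] += int(normalize(row["output"]) == normalize(row["expected"]))
    return {model: hits[model] / totals[model] for model in totals}


# Illustrative rows only
rows = [
    {"model": "v1", "output": "Paris.", "expected": "paris"},
    {"model": "v1", "output": "Lyon", "expected": "Marseille"},
    {"model": "v2", "output": "PARIS", "expected": "Paris"},
    {"model": "v2", "output": "Marseille!", "expected": "marseille"},
]
```

On these rows, `v1` scores 0.5 and `v2` scores 1.0. Exact match is only appropriate for short, canonical answers; free-form answers usually need an LLM judge calibrated against the same labeled set.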

Creating a Braintrust Dataset

From Logs

Export Logs

Export logs as CSV (input, model_output, metadata)

Add Expected Values

Use Braintrust UI/API to add expected values and start annotation rounds

Monitor Agreement

Monitor annotator agreement and finalize dataset for model evaluation
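Annotator agreement for two annotators is commonly reported as Cohen's kappa, which corrects raw agreement p_o for the agreement p_e expected by chance: kappa = (p_o - p_e) / (1 - p_e). The sketch below is a self-contained implementation of that standard statistic; it is not necessarily the agreement metric Braintrust itself displays.

```python
from collections import Counter


def cohens_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: probability both pick the same label independently.
    expected = sum(freq_a[k] * freq_b[k] for k in freq_a.keys() | freq_b.keys()) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label everywhere
    return (observed - expected) / (1 - expected)


a = ["ok", "ok", "bad", "ok", "bad", "ok"]
b = ["ok", "bad", "bad", "ok", "bad", "ok"]
```

These annotators agree on 5 of 6 items (p_o = 0.83), but chance agreement is 0.5, so kappa is about 0.67. Rows with low agreement are natural candidates for another review round before the dataset is finalized.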

Integration Points & Engineering Effort

Understanding the integration requirements and ongoing effort needed for each platform.

Noveum.ai

Engineering Requirements

- Instrument agent to emit structured, ordered traces

- Best if tool calls and retrievals have typed schemas

Effort Level

Requires tracing and typed-schema instrumentation, but far less annotation work

Deployment

Runs on live traffic; able to surface issues fast

Arize / Braintrust

Engineering Requirements

- Capture inputs/outputs reliably

- Establish annotation flow for ground truth

- Human + tooling + storage for labels

Effort Level

Annotation pipelines and human QA; higher costs for labeling

Deployment

Powerful for benchmarking and compliance reporting, but annotation overhead is a gating factor

Choosing the Right Tool

Use this framework to determine which platform is best for your specific needs.

If you run agentic systems that orchestrate tools and care about "did the agent behave correctly?": choose Noveum.ai.

If you need strict accuracy metrics against a known gold standard (QA correctness, retrieval exactness, labeled tasks): choose Arize or Braintrust.

If you want both continuous monitoring and periodic benchmarks: combine Noveum.ai for monitoring with Arize or Braintrust for benchmarks.

Ready to Try Trace-Based Evaluation?

Start monitoring your agents with Noveum.ai today. No ground truth required, no manual labeling overhead.

Limitations & Trade-offs

No evaluation approach is universally sufficient. Understanding where each platform excels and falls short helps make the right choice.

Where Noveum.ai Can Have Limitations

Does not confirm correctness against an external canonical answer (where ground truth is required)

Noveum excels at detecting structural and provenance-based errors using complete trace context. It can surface partial correctness, degrees of completeness, and context-supported vs. unsupported claims. However, cases requiring external canonical answers (precise numeric solutions, legal statutes, regulated medical facts) may still need ground truth.

Weak for some canonical ground-truth needs

For tasks demanding a single authoritative ground truth (exact math solutions, regulatory compliance assertions matching statute text), organizations often still maintain a gold dataset for auditability.

Depends on trace quality

Noveum's strengths are proportional to trace fidelity. Well-instrumented traces (typed tool-call records, retrieval provenance, token-level context) enable rich scoring; poor traces reduce coverage.

Operational complexity for non-instrumented systems

If you cannot or will not instrument your agent to emit detailed traces/spans, Noveum's advantages are limited. To lower this barrier, Noveum provides ETL pipelines that convert raw traces into clean, enriched dataset items.

Where Arize is a Better Fit

Traditional ML model monitoring

Arize is a strong fit for non-LLM / non-agent workloads: classical ML models, tabular models, time-series forecasting, recommendation systems, classification/regression pipelines.

Feature-level drift & statistical ML analytics

Arize excels at feature distribution drift, population stability index (PSI), embedding drift, and statistical monitoring over long time horizons for ML systems.

ML governance & compliance reporting

In organizations where ML governance frameworks already exist (model cards, dataset lineage, statistical audits), Arize fits naturally.

Note: Noveum is explicitly not designed for traditional ML and does not aim to replace Arize in this space.

Where Braintrust is a Better Fit

Human annotation at scale

Braintrust is optimized for large-scale human review workflows, reviewer management, and annotation QA. Noveum minimizes human labeling and is not an annotation platform.

Explicit gold-label creation for audits

When regulations or customers require explicitly human-verified labels stored as datasets, Braintrust is often the better fit.

Training data generation

Braintrust-labeled datasets can be reused directly for fine-tuning or supervised learning workflows.

When Each Tool Wins

A quick reference guide to help you choose the right platform for each scenario.

| Scenario | Best Choice |
|---|---|
| Agent misuse, tool errors, hallucinations, partial correctness | Noveum.ai |
| Live production monitoring without labels | Noveum.ai |
| Model comparison without ground truth | Noveum.ai |
| LLM-as-Judge evaluations at scale | Noveum.ai |
| Debugging complex agent flows | Noveum.ai |
| Traditional ML (non-LLM) monitoring | Arize |
| Human annotation & gold-label creation | Braintrust |
| Publishing accuracy metrics with ground truth | Arize / Braintrust |
| Human-reviewed correctness | Braintrust |

Important Clarifications

Model Comparison

Noveum is stronger than Arize/Braintrust for agent and LLM model comparison when ground truth is unavailable. You can run the same agent or prompt against GPT-4, Claude, Gemini, etc., and compare aggregate scores across 68+ scorers (hallucination, completeness, context fidelity, tool correctness, safety, bias, format, etc.) using identical traces and evaluation logic.
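Mechanically, comparing models without ground truth amounts to aggregating per-scorer scores over the same traces for each model. A minimal sketch, assuming every score is oriented so that higher is better; the model and scorer names and the example scores are illustrative placeholders, not benchmark results.

```python
from collections import defaultdict
from statistics import mean


def compare_models(results: list[tuple[str, str, float]]) -> dict[str, dict[str, float]]:
    """(model, scorer, score) triples -> mean score per model per scorer."""
    grouped: dict[str, dict[str, list[float]]] = defaultdict(lambda: defaultdict(list))
    for model, scorer, score in results:
        grouped[model][scorer].append(score)
    return {m: {s: mean(v) for s, v in by_scorer.items()} for m, by_scorer in grouped.items()}


def overall_ranking(summary: dict[str, dict[str, float]]) -> list[str]:
    """Rank models by the unweighted average of their per-scorer means, best first."""
    return sorted(summary, key=lambda m: mean(list(summary[m].values())), reverse=True)


# Illustrative scores only; in practice these come from running the same
# scorers over traces produced by each model on identical inputs.
results = [
    ("model_a", "faithfulness", 1.0), ("model_a", "faithfulness", 0.5),
    ("model_a", "tool_correctness", 1.0),
    ("model_b", "faithfulness", 0.5), ("model_b", "faithfulness", 0.5),
    ("model_b", "tool_correctness", 0.75),
]
```

Reporting the per-scorer table alongside the overall ranking matters, because an unweighted average can hide a model that is better overall but worse on a dimension you care about most, such as safety.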

LLM-as-Judge at Scale

Noveum provides first-class LLM-as-judge scoring across its scorer library. It can identify hallucination, partial correctness, accuracy failures, and qualitative degradation directly from traces, without expected outputs. This is not an area where Arize is better.

Recommendations & Best Practices

Follow these proven strategies to get the most out of your evaluation approach.

Instrument Early

Structured traces (tool events + retrieval provenance) are the foundation for both behavioral checks and for creating labeled datasets later.

Start with Trajectory Checks

Catch structural issues cheaply with Noveum-style checks before investing heavily in labeling.

Maintain a Small GT Test Set

Keep a curated labeled set (50–500 examples) for calibration of any LLM-judge methods and for regression testing.
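Calibrating an LLM judge against that curated set can start by measuring how often its pass/fail verdicts agree with the human labels, and in which direction it errs. A minimal sketch, assuming both judge and human verdicts are recorded as booleans (True = pass).

```python
def judge_calibration(judge: list[bool], human: list[bool]) -> dict[str, float]:
    """Agreement and error rates of an LLM judge against human ground-truth verdicts."""
    if len(judge) != len(human) or not human:
        raise ValueError("need equal-length, non-empty verdict lists")
    n = len(human)
    pairs = list(zip(judge, human))
    false_pass = sum(j and not h for j, h in pairs)  # judge passed a bad output
    false_fail = sum(h and not j for j, h in pairs)  # judge failed a good output
    return {
        "agreement": sum(j == h for j, h in pairs) / n,
        "false_pass_rate": false_pass / n,
        "false_fail_rate": false_fail / n,
    }


judge = [True, True, False, True]
human = [True, False, False, True]
```

Splitting the errors by direction is useful: a judge that is too lenient (high false-pass rate) lets regressions through, while one that is too strict mostly creates review noise.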

Use Hybrid Flows

Detect likely failures with trajectory checks, then send prioritized samples to human labelers (via Braintrust-style tools) to build GT selectively.

Version Datasets and Trace Schemas

Make it simple to reproduce evaluations across model changes.

Example Implementations

Example Alert Rules (Noveum Style)

If a scenario requires a bank_api call but no bank_api tool event appears → alert.

If the final answer cites a doc ID or snippet that wasn't present in the retrieved docs → alert.

If the answer includes user data not in the current session context → alert.

If the final_answer event occurs before the required validation or step events complete → alert.
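The four rules above can be expressed as small predicates over an ordered list of trace events. The sketch below assumes a hypothetical event format: dicts with a `type` key and rule-specific fields (`tool`, `doc_ids`, `cited_doc_ids`, `step`), plus a `user_data` field on the final answer that some upstream extractor is assumed to populate. Noveum's actual trace schema and alert configuration may differ.

```python
from typing import Any

Event = dict[str, Any]  # hypothetical event shape


def check_trace(events: list[Event], required_tools: set[str],
                required_steps: set[str], session_data: set[str]) -> list[str]:
    """Apply the four example rules to one trace; an empty list means no alerts."""
    alerts = []
    # Rule 1: every required tool must appear as a tool_call event.
    called = {e["tool"] for e in events if e["type"] == "tool_call"}
    for tool in sorted(required_tools - called):
        alerts.append(f"required tool never called: {tool}")

    retrieved = {d for e in events if e["type"] == "retrieval" for d in e["doc_ids"]}
    final_idx = next((i for i, e in enumerate(events) if e["type"] == "final_answer"), None)
    if final_idx is None:
        return alerts + ["no final_answer event"]
    final = events[final_idx]

    # Rule 2: cited docs must have been retrieved in this trace.
    for doc in sorted(set(final.get("cited_doc_ids", [])) - retrieved):
        alerts.append(f"cites doc not retrieved: {doc}")
    # Rule 3: user data in the answer must come from the current session.
    for value in sorted(set(final.get("user_data", [])) - session_data):
        alerts.append(f"user data not in session context: {value}")
    # Rule 4: required steps must complete before the final answer.
    done_before = {e["step"] for e in events[:final_idx] if e["type"] == "step_complete"}
    for step in sorted(required_steps - done_before):
        alerts.append(f"final answer before required step: {step}")
    return alerts


events = [
    {"type": "retrieval", "doc_ids": ["doc-1", "doc-2"]},
    {"type": "step_complete", "step": "validate_identity"},
    {"type": "final_answer", "cited_doc_ids": ["doc-2", "doc-9"], "user_data": ["acct-123"]},
]
```

Calling `check_trace(events, {"bank_api"}, {"validate_identity", "check_limits"}, {"acct-123"})` raises three alerts: the missing bank_api call, the citation of the never-retrieved `doc-9`, and the skipped `check_limits` step.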

Example Evaluator Templates (Arize/LLM-Judge)

Accuracy Template

Prompt the judge with input + model_output + expected; ask for a pass/fail verdict and a confidence score.

Hallucination Template

Prompt the judge with input + model_output + retrievals; ask whether any claims are unsupported.
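The two templates could be assembled as plain prompt strings along the lines of the sketch below, which also parses a JSON verdict from the judge's reply. The wording, JSON fields, and function names are hypothetical; no specific judge model or Arize API is called, and the reply string stands in for whatever your LLM client returns.

```python
import json

# Doubled braces survive str.format() as literal { } in the prompt.
ACCURACY_TEMPLATE = """You are grading a model answer.
Input: {input}
Model output: {model_output}
Expected answer: {expected}
Reply with JSON: {{"verdict": "pass" or "fail", "confidence": number between 0 and 1}}"""

HALLUCINATION_TEMPLATE = """You are checking a model answer for unsupported claims.
Input: {input}
Model output: {model_output}
Retrieved context:
{retrievals}
Reply with JSON: {{"unsupported_claims": [claims not supported by the context]}}"""


def accuracy_prompt(user_input: str, model_output: str, expected: str) -> str:
    return ACCURACY_TEMPLATE.format(input=user_input, model_output=model_output, expected=expected)


def hallucination_prompt(user_input: str, model_output: str, retrievals: list[str]) -> str:
    context = "\n".join(f"- {chunk}" for chunk in retrievals)
    return HALLUCINATION_TEMPLATE.format(input=user_input, model_output=model_output,
                                         retrievals=context)


def parse_accuracy_reply(reply: str) -> tuple[bool, float]:
    """Parse the judge's JSON reply into (passed, confidence)."""
    data = json.loads(reply)
    return data["verdict"] == "pass", float(data["confidence"])
```

Asking for structured JSON keeps the judge's output machine-checkable. Malformed replies should be counted and retried rather than silently treated as passes.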

Noveum ETL & Golden-Dataset Workflow

Traces → ETL → Enriched items (goldens): Noveum provides ETL pipelines that take raw traces/spans from production, normalize and enrich them (add retrieval provenance, tool-call typing, tokenized contexts, metadata), and produce clean dataset items. These items can act as goldens for targeted benchmarking, be exported for human annotation, or be used directly as high-quality test cases in automated eval pipelines.

Why this matters: It allows teams to get the best of both worlds: continuous, trace-based monitoring plus the ability to extract and maintain curated golden datasets for audits, regulatory needs, or external benchmark comparisons.
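As a rough illustration of the traces → ETL → goldens flow, the sketch below flattens one raw trace into a dataset item with retrieval provenance and tool-call records. It leaves `expected` empty until a human fills it in. The raw span format and every field name are hypothetical; Noveum's ETL mappers are generated per project and may look quite different.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class DatasetItem:
    trace_id: str
    user_input: str
    final_answer: str
    retrieved_doc_ids: list[str] = field(default_factory=list)
    tool_calls: list[dict[str, Any]] = field(default_factory=list)
    metadata: dict[str, Any] = field(default_factory=dict)
    expected: Optional[str] = None  # set later if the item becomes a labeled golden


def trace_to_item(trace: dict[str, Any]) -> DatasetItem:
    """Map one raw trace (hypothetical span format) to an enriched dataset item."""
    spans = sorted(trace["spans"], key=lambda s: s["start_time"])  # restore event order
    item = DatasetItem(
        trace_id=trace["trace_id"],
        user_input=next((s["input"] for s in spans if s["kind"] == "user_input"), ""),
        final_answer=next((s["output"] for s in reversed(spans) if s["kind"] == "llm"), ""),
        metadata={k: trace[k] for k in ("user_id", "timestamp") if k in trace},
    )
    for s in spans:
        if s["kind"] == "retrieval":
            item.retrieved_doc_ids.extend(s["doc_ids"])
        elif s["kind"] == "tool":
            item.tool_calls.append({"name": s["name"], "args": s.get("args", {})})
    return item


raw = {"trace_id": "t-1", "user_id": "u-7", "spans": [
    {"kind": "llm", "start_time": 3, "output": "Your balance is 42."},
    {"kind": "user_input", "start_time": 0, "input": "What's my balance?"},
    {"kind": "tool", "start_time": 2, "name": "bank_api", "args": {"op": "balance"}},
    {"kind": "retrieval", "start_time": 1, "doc_ids": ["faq-3"]},
]}
```

Because each item keeps its provenance (retrieved doc IDs, tool calls, trace ID), a reviewer who later adds an `expected` value can see exactly what the agent had available when it answered.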

Frequently Asked Questions

Get answers to the most common questions about choosing between these platforms.

Noveum can also triage and prioritize traces: use high-confidence structural-failure detections to seed a labeled dataset for human review or for Arize benchmarking.


Evaluating for your enterprise?

Get a personalized demo and see how Noveum.ai fits your specific use case.
