Integrating Evaluation into Your Agent Development Workflow: A Guide to Eval-Driven Development

Shashank Agarwal

12/26/2025

The Shift to Eval-Driven Development

In the world of traditional software development, Test-Driven Development (TDD) has long been a cornerstone of building reliable and maintainable code. However, when it comes to developing AI agents, a new paradigm is emerging: Eval-Driven Development (EDD) [1]. EDD is a development methodology where comprehensive evaluation suites are created in lockstep with agent capabilities. Instead of writing tests that assert specific outputs, you write "evals" that assess the quality and performance of your agent across a variety of dimensions.

This shift in mindset is crucial because AI agents are fundamentally different from traditional software. They are non-deterministic, their behavior can be difficult to predict, and they can fail in subtle and unexpected ways. By embracing EDD, you can replace reactive, ad-hoc evaluation with a proactive, systematic process that helps you build more robust and trustworthy agents.

This guide will walk you through the principles of EDD and provide a practical workflow for integrating evaluation into your agent development process. We will also explore how a platform like Noveum.ai can help you implement EDD at scale.

The Benefits of Integrating Evaluation Early

Integrating evaluation into your development workflow from the very beginning offers a number of significant advantages:

- Catch Errors Early: By continuously evaluating your agent as you build it, you can catch errors and regressions early in the development cycle, when they are easier and less costly to fix.

- Improve Reliability: A systematic approach to evaluation helps you identify and address the root causes of agent failures, leading to more reliable and predictable behavior.

- Accelerate Development: While it may seem counterintuitive, investing in evaluation upfront can actually accelerate your development process. By catching errors early and providing clear signals for improvement, EDD can help you iterate faster and with more confidence.

- Build Trust: A rigorous evaluation process is essential for building trust in your AI agents, both internally with your team and externally with your users.

A Practical Workflow for Integrating Evaluation

Here is a step-by-step guide to integrating evaluation into your agent development workflow:

1. Define Your Evaluation Metrics and Benchmarks

The first step is to define what you want to measure. This will depend on the specific goals of your agent, but some common metrics include:

- Task Completion Rate: Does the agent successfully complete its assigned tasks?

- Accuracy: Is the agent's output correct?

- Hallucination Rate: Does the agent generate false or misleading information?

- Compliance: Does the agent adhere to its constraints and safety guidelines?

- Cost and Latency: How much does it cost to run the agent, and how long does it take to produce a response?
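As a rough illustration, the metrics above can be aggregated from per-case results in a few lines of Python. This is a minimal sketch, assuming a hypothetical `EvalRecord` schema in which each test case has already been judged for completion, correctness, hallucination, and compliance; how those judgments are made (human labels, rule checks, or a model-based judge) is outside its scope.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class EvalRecord:
    """Judged outcome of one test case (hypothetical schema)."""
    completed: bool      # did the agent finish the assigned task?
    correct: bool        # did the output match the expected answer?
    hallucinated: bool   # did the output contain false or unsupported claims?
    compliant: bool      # did the agent respect its constraints and safety rules?
    cost_usd: float      # model and tool spend for this case
    latency_s: float     # wall-clock time to the final response


def summarize(records: list[EvalRecord]) -> dict[str, float]:
    """Aggregate per-case records into benchmark-level metrics."""
    if not records:
        raise ValueError("no eval records to summarize")
    n = len(records)
    return {
        "task_completion_rate": sum(r.completed for r in records) / n,
        "accuracy": sum(r.correct for r in records) / n,
        "hallucination_rate": sum(r.hallucinated for r in records) / n,
        "compliance_rate": sum(r.compliant for r in records) / n,
        "mean_cost_usd": mean(r.cost_usd for r in records),
        "mean_latency_s": mean(r.latency_s for r in records),
    }


# Made-up records for illustration only.
records = [
    EvalRecord(True, True, False, True, cost_usd=0.02, latency_s=1.5),
    EvalRecord(False, False, True, True, cost_usd=0.04, latency_s=3.5),
]
print(summarize(records))
```

Averages hide tail behavior, so for latency in particular you may also want a high percentile alongside the mean.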

Once you have defined your metrics, you need to create a benchmark to measure them against. This could be a set of golden test cases, a human-annotated dataset, or a combination of both.
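For short, factual answers, a golden test case can be as simple as a prompt paired with a reference answer. The sketch below is a minimal, hypothetical example (`GoldenCase`, `normalize`, and `run_benchmark` are illustrative names, not a library API) that scores an agent, modeled as any function from prompt to text, by normalized exact match.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class GoldenCase:
    """One benchmark item (hypothetical schema)."""
    case_id: str
    prompt: str
    expected: str  # reference answer, e.g. written or reviewed by an annotator


def normalize(text: str) -> str:
    """Lowercase, strip punctuation, and collapse whitespace so formatting noise is ignored."""
    text = re.sub(r"[^\w\s]", " ", text.lower())
    return " ".join(text.split())


def run_benchmark(agent: Callable[[str], str], cases: list[GoldenCase]) -> dict[str, bool]:
    """Return pass/fail per golden case using normalized exact match."""
    return {c.case_id: normalize(agent(c.prompt)) == normalize(c.expected) for c in cases}


# Toy agent for illustration: a lookup table standing in for a real agent call.
cases = [
    GoldenCase("capital-fr", "What is the capital of France?", "Paris"),
    GoldenCase("arith", "What is 2 + 2?", "4"),
]
canned = {"What is the capital of France?": "paris.", "What is 2 + 2?": "five"}
print(run_benchmark(canned.__getitem__, cases))  # {'capital-fr': True, 'arith': False}
```

Exact match is only a reasonable proxy when answers are short and unambiguous; open-ended outputs usually need a rubric, human review, or a model-based judge instead.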

2. Create an Evaluation Dataset

Your evaluation dataset should be representative of the real-world inputs your agent will encounter. It should include a mix of common use cases, edge cases, and adversarial examples designed to test the limits of your agent's capabilities.
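One way to keep that mix honest is to tag every case with its category and check the proportions whenever the dataset changes. A minimal sketch follows, assuming a hypothetical JSON Lines format with `input` and `category` fields and an arbitrary 10% minimum share per category.

```python
import json
from collections import Counter

# Hypothetical category labels; use whatever taxonomy fits your agent.
REQUIRED_CATEGORIES = ("common", "edge", "adversarial")


def load_cases(jsonl_text: str) -> list[dict]:
    """Parse one JSON object per non-empty line (JSON Lines)."""
    return [json.loads(line) for line in jsonl_text.splitlines() if line.strip()]


def underrepresented(cases: list[dict], min_share: float = 0.1) -> list[str]:
    """Return required categories that make up less than `min_share` of the dataset."""
    if not cases:
        return list(REQUIRED_CATEGORIES)
    counts = Counter(case["category"] for case in cases)  # missing categories count as 0
    return [cat for cat in REQUIRED_CATEGORIES if counts[cat] / len(cases) < min_share]


# Made-up dataset: eight common cases, two edge cases, no adversarial cases.
sample = "\n".join(
    [json.dumps({"input": f"question {i}", "category": "common"}) for i in range(8)]
    + [json.dumps({"input": "empty string", "category": "edge"}),
       json.dumps({"input": "very long input", "category": "edge"})]
)
print(underrepresented(load_cases(sample)))  # ['adversarial']
```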

3. Automate the Evaluation Process

To make evaluation a seamless part of your development workflow, it's essential to automate the process as much as possible. This can be done by integrating your evaluation suite into your CI/CD pipeline, so that every code change is automatically evaluated against your benchmark.
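A common pattern is a small gate step that runs after the eval suite and fails the build when a metric crosses a threshold. The sketch below assumes the eval run produces a dictionary of metric values; the metric names and threshold values are hypothetical placeholders, not recommendations.

```python
# Hypothetical thresholds: (metric name, bound, True if the metric must stay at or above the bound).
THRESHOLDS = [
    ("task_completion_rate", 0.90, True),
    ("accuracy", 0.85, True),
    ("compliance_rate", 0.99, True),
    ("hallucination_rate", 0.05, False),
    ("mean_latency_s", 5.0, False),
]


def gate(summary: dict[str, float]) -> list[str]:
    """Return human-readable failures; an empty list means the change passes the gate."""
    failures = []
    for name, bound, is_floor in THRESHOLDS:
        value = summary.get(name)
        if value is None:
            failures.append(f"{name}: missing from eval results")
        elif is_floor and value < bound:
            failures.append(f"{name}: {value:.3f} is below the minimum {bound}")
        elif not is_floor and value > bound:
            failures.append(f"{name}: {value:.3f} is above the maximum {bound}")
    return failures


# In CI, `summary` would come from the eval run; these values are made up for illustration.
summary = {"task_completion_rate": 0.93, "accuracy": 0.81, "compliance_rate": 1.0,
           "hallucination_rate": 0.02, "mean_latency_s": 3.2}
for problem in gate(summary):
    print("EVAL GATE FAILED:", problem)
```

In a CI job, a wrapper script would print each returned failure and call `sys.exit(1)` when the list is non-empty, which CI systems generally treat as a failed step. Fixed thresholds set a floor; comparing against the last accepted run also catches smaller regressions.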

4. Use Evaluation Results to Iterate and Improve

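The final step is to use the results of your evaluations to iterate and improve your agent. This could involve fine-tuning your model, improving your prompts, or adding new tools to your agent's toolkit. After each change, re-run the evaluation suite to confirm that the targeted metric improved and that no other metric regressed.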
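To tell whether a prompt tweak or model change actually helped, it is useful to compare the candidate's metrics against a baseline run on the same dataset. A minimal sketch, assuming both runs are summarized as dictionaries mapping metric names to values; the set of lower-is-better metric names is hypothetical.

```python
# Hypothetical metric names where a smaller value is better; all others are treated as higher-is-better.
LOWER_IS_BETTER = {"hallucination_rate", "mean_cost_usd", "mean_latency_s"}


def compare_runs(baseline: dict[str, float], candidate: dict[str, float],
                 tolerance: float = 0.01) -> dict[str, str]:
    """Label each metric present in both runs as improved, regressed, or unchanged."""
    verdicts = {}
    for name in sorted(baseline.keys() & candidate.keys()):
        delta = candidate[name] - baseline[name]
        if name in LOWER_IS_BETTER:
            delta = -delta  # flip the sign so a positive delta always means "better"
        if delta > tolerance:
            verdicts[name] = "improved"
        elif delta < -tolerance:
            verdicts[name] = "regressed"
        else:
            verdicts[name] = "unchanged"
    return verdicts


# Made-up baseline and candidate runs for illustration.
baseline = {"accuracy": 0.80, "hallucination_rate": 0.10, "mean_latency_s": 2.0}
candidate = {"accuracy": 0.86, "hallucination_rate": 0.04, "mean_latency_s": 2.5}
print(compare_runs(baseline, candidate))
# {'accuracy': 'improved', 'hallucination_rate': 'improved', 'mean_latency_s': 'regressed'}
```

A single absolute tolerance is a simplification: a change of 0.01 means something very different for accuracy than for latency in seconds, so each metric usually deserves its own tolerance. Because agent outputs are non-deterministic, small datasets may also need repeated runs before a difference is trustworthy.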

The Role of Continuous Evaluation

Evaluation doesn't stop once your agent is in production. In fact, it's just as important to continuously monitor your agent's performance in the real world. This is because:

- Data Drift: The distribution of real-world inputs can shift over time, degrading your agent's performance.

- New Failure Modes: As users interact with your agent in new and unexpected ways, you may discover new failure modes that you didn't anticipate during development.

By continuously evaluating your agent in production, you can proactively identify and address these issues before they impact your users.
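A simple form of continuous monitoring is to evaluate a sample of production responses and alert when the recent pass rate falls meaningfully below the rate your benchmark led you to expect. The sketch below assumes each sampled response has already been scored pass/fail by some evaluator; the class name, window size, and alert threshold are hypothetical.

```python
from collections import deque


class RollingPassRateMonitor:
    """Flag degradation when the recent production pass rate drops well below a baseline.

    Hypothetical helper: each recorded result is assumed to come from an evaluator
    that has already scored one sampled production response as pass/fail.
    """

    def __init__(self, baseline_rate: float, window: int = 200, max_drop: float = 0.05):
        self.baseline_rate = baseline_rate  # e.g. the pass rate observed on your benchmark
        self.max_drop = max_drop            # tolerated absolute drop before alerting
        self.results = deque(maxlen=window)

    def record(self, passed: bool) -> bool:
        """Add one scored response; return True if an alert should fire."""
        self.results.append(passed)
        if len(self.results) < self.results.maxlen:
            return False  # wait for a full window so a few early failures don't trigger alerts
        current_rate = sum(self.results) / len(self.results)
        return self.baseline_rate - current_rate > self.max_drop


# Simulated stream of scored responses: 8 passes followed by 3 failures.
monitor = RollingPassRateMonitor(baseline_rate=0.9, window=10)
alerts = [monitor.record(passed) for passed in [True] * 8 + [False] * 3]
print(alerts)
```

Waiting for a full window avoids alerts triggered by a handful of early failures. Because quality scores can lag behind shifts in what users ask, a production setup would typically also track input distributions directly to spot data drift.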

How Noveum.ai Facilitates Eval-Driven Development

Noveum.ai is a platform designed to help you implement an EDD workflow from start to finish. Here's how it can help:

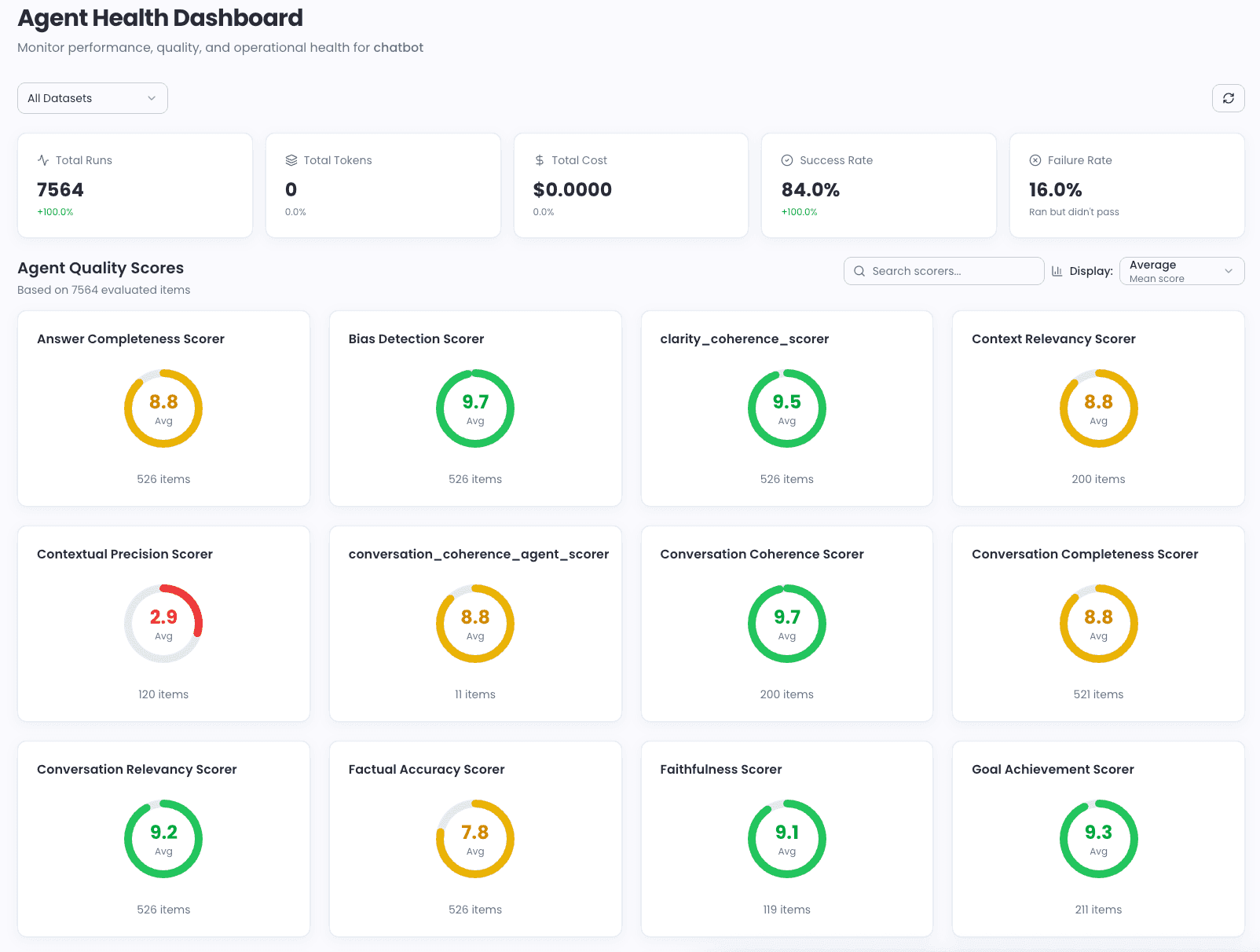

- Automated Evaluation: Noveum.ai automatically evaluates your agent across 73+ dimensions, so you don't have to spend time writing and maintaining your own evaluation suite.

- Root Cause Analysis: When your agent fails, Noveum.ai's NovaPilot feature uses AI to automatically identify the root cause of the failure and suggest concrete steps for remediation.

- Continuous Monitoring: Noveum.ai provides continuous monitoring of your agent in production, so you can be alerted to any performance degradation or new failure modes as they arise.

- System Prompt as Ground Truth: Noveum.ai uses your system prompt as the ground truth for evaluation, which eliminates the need for manual labeling and makes it easy to get started.
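The general idea of treating a system prompt as the evaluation rubric can be illustrated with a generic LLM-as-judge sketch. This is not Noveum.ai's implementation or API: the template, the `judge_against_system_prompt` function, and the `judge` callable (standing in for whatever model client you use) are all hypothetical.

```python
from typing import Callable

# Hypothetical judge prompt; the system prompt under test serves as the rubric.
JUDGE_TEMPLATE = """You are auditing an AI agent.
The agent was configured with this system prompt, which defines its required behavior:
<system_prompt>
{system_prompt}
</system_prompt>

User input:
{user_input}

Agent response:
{response}

Does the response follow every instruction in the system prompt?
Answer PASS or FAIL on the first line, then give a one-sentence reason."""


def judge_against_system_prompt(judge: Callable[[str], str], system_prompt: str,
                                user_input: str, response: str) -> bool:
    """Ask a judge model whether `response` complies with `system_prompt`.

    `judge` is a placeholder for any function that sends a prompt to an LLM and returns its text.
    """
    prompt = JUDGE_TEMPLATE.format(system_prompt=system_prompt,
                                   user_input=user_input, response=response)
    lines = judge(prompt).strip().splitlines()
    return bool(lines) and lines[0].strip().upper() == "PASS"  # unparseable output counts as FAIL


# Fake judge for illustration: passes only if the rubric contains the refund rule.
def fake_judge(prompt: str) -> str:
    return "PASS\nStayed within policy." if "Never promise refunds" in prompt else "FAIL\nNo rubric."


print(judge_against_system_prompt(
    fake_judge,
    system_prompt="You are a support agent. Never promise refunds.",
    user_input="Can I get my money back?",
    response="I can connect you with our billing team to review your request.",
))
```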

By leveraging the power of Noveum.ai, you can move from a manual, time-consuming evaluation process to an automated, data-driven approach that enables you to build better, more reliable AI agents faster.

Conclusion

Eval-Driven Development is a powerful new paradigm for building AI agents. By embracing EDD and integrating evaluation into your development workflow from the very beginning, you can build more robust, reliable, and trustworthy agents. With a platform like Noveum.ai, you can implement EDD at scale and accelerate your journey to production.

References

[1] LinkedIn: Evaluations-Driven Development: A New Paradigm for Building AI
