🚀 Noveum.ai Overview

A unified observability, evaluation, and autofix platform for modern AI applications — including agents, LLMs, and RAG systems.

Welcome to Noveum.ai, the end-to-end observability and autofix platform built specifically for AI applications. Whether you're building LLM-powered chatbots, RAG systems, multi-agent workflows, or any other AI-driven application, Noveum gives you the insights you need to understand its behavior, proposes fixes, and autofixes issues for you.

Platform Overview


🔄 How Noveum Works - Step-by-Step Process

Install & Setup

Add the noveum-trace SDK to your project

Add Tracing

Wrap your agent calls to capture traces

Monitor & Analyze

View traces in real time on the dashboard

Insights & AutoFix

Explore scores, analyze the reasoning behind them, and apply autofixes

🚀 Core Capabilities

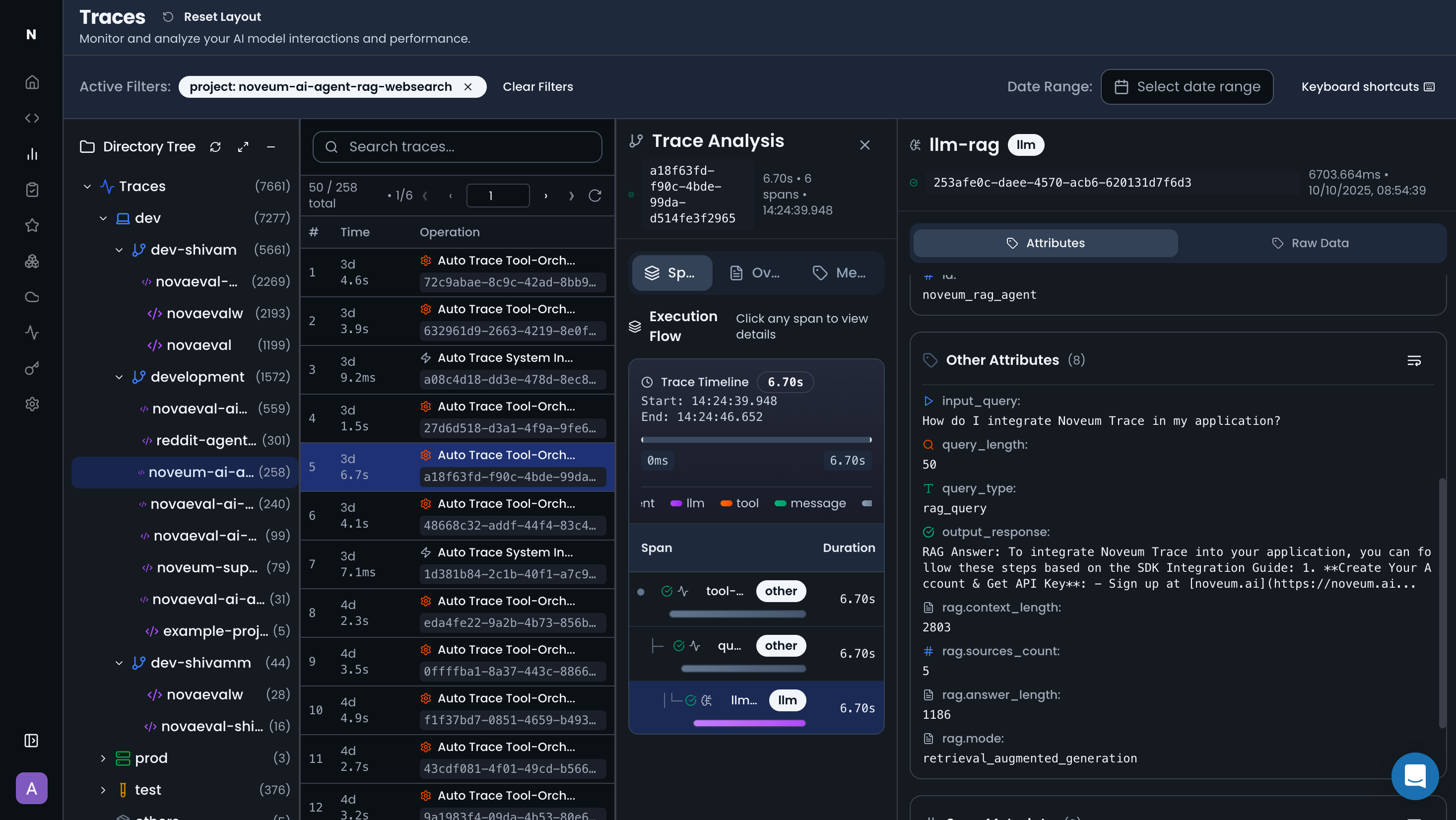

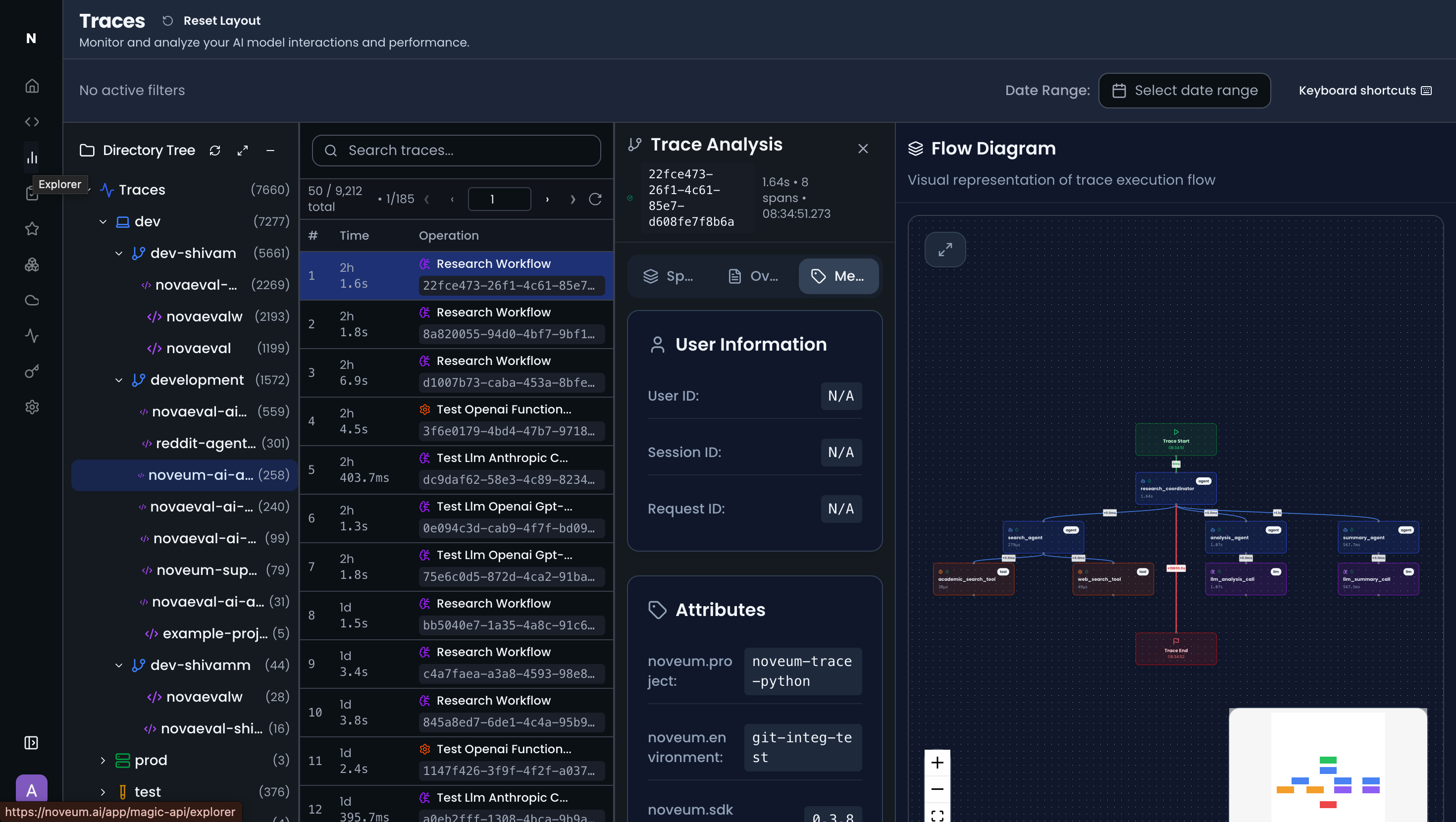

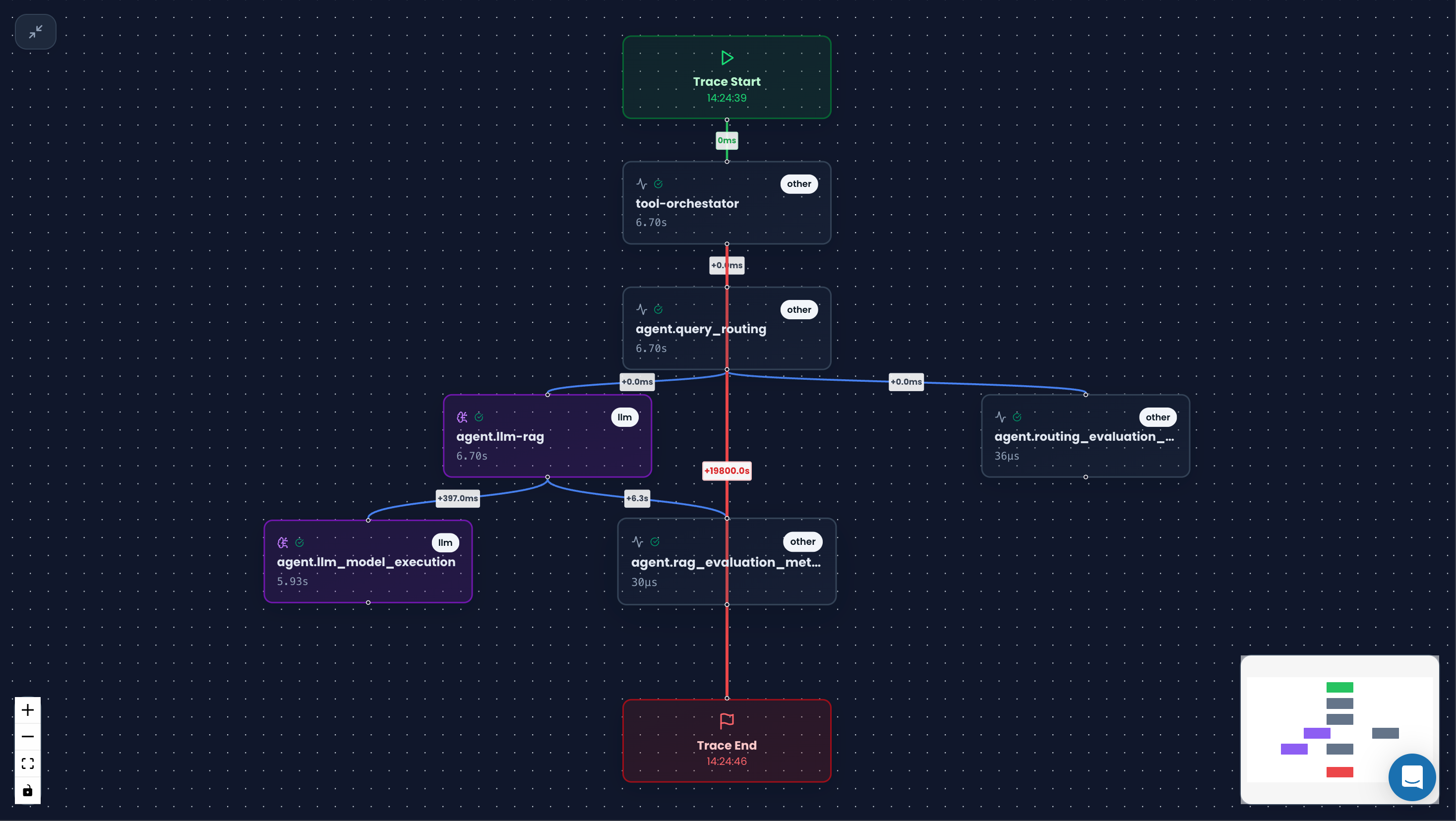

🔍 Complete AI Tracing

- Complete trace of all AI agent calls

- Hierarchical trace visualization with spans

- Python SDK for seamless integration

- Minimal code changes required

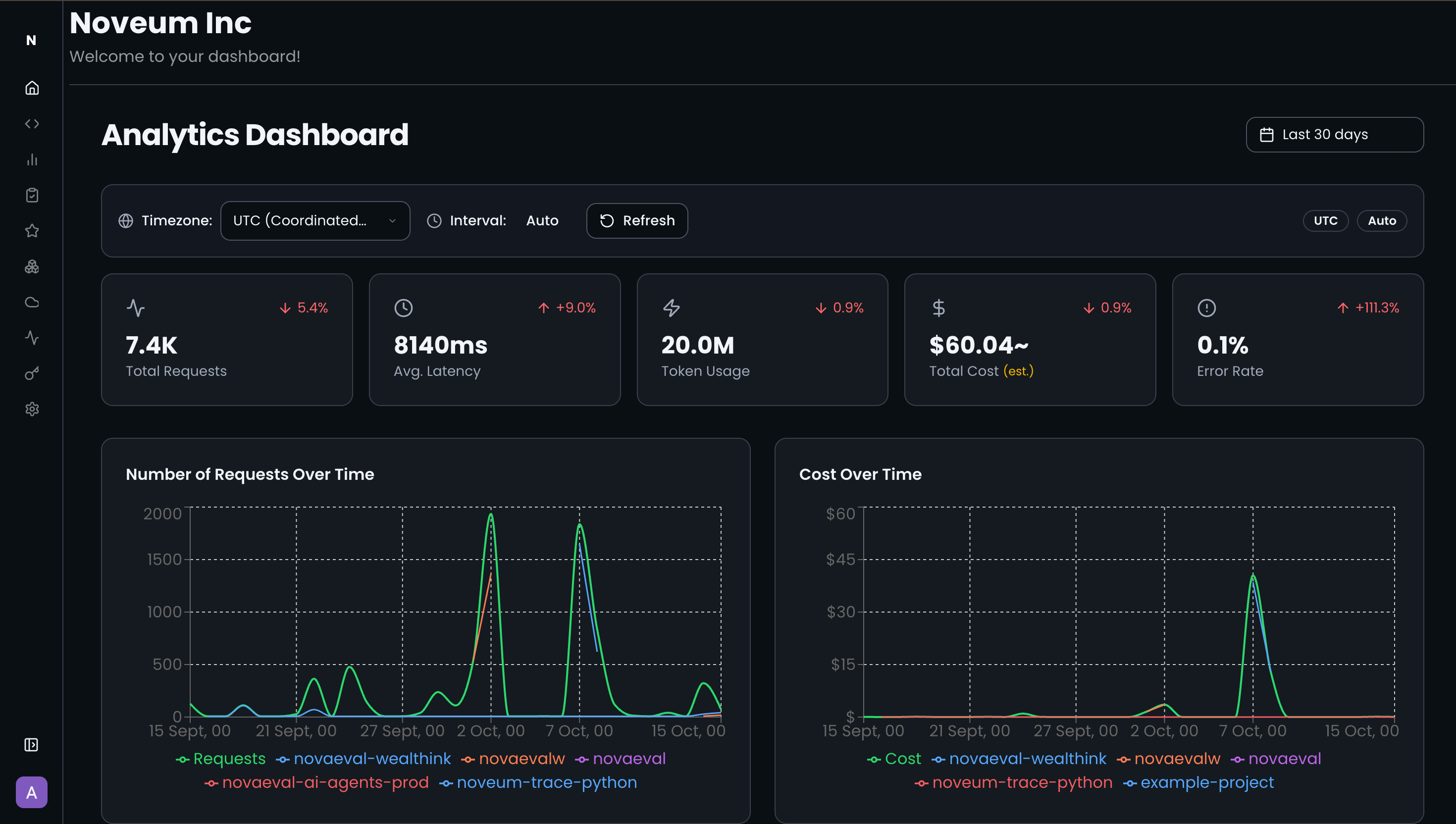

📊 Dashboard Analysis & Scoring

- Analyze AI calls with detailed insights

- Score performance with reasoning

- Interactive trace visualization

- Real-time monitoring and metrics

🛠️ Solution Proposal & Autofix

- Intelligent solution recommendations

- Automated fix suggestions

- Performance optimization guidance

- Proactive issue resolution

Why AI Applications Need Specialized Observability

Traditional monitoring tools fall short when it comes to AI applications because they don't understand:

- AI-Specific Metrics: Token usage, model costs, prompt effectiveness

- Complex Workflows: Multi-step RAG pipelines, agent interactions, tool usage

- Context Flow: How data moves through embeddings, retrievals, and generations

- Cost Attribution: Which operations drive your AI spending

- Quality Metrics: Beyond latency - understanding output quality and relevance

- Custom Evals: Scorers tailored to your specific use case and business requirements

Noveum.ai bridges this gap with purpose-built observability for the AI era.

🔄 Complete Workflow - How it happens

Step 1: SDK Integration

Add tracing to your code with minimal changes using context managers:
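A minimal sketch of the context-manager pattern, using only the Python standard library. `trace_span`, `SPANS`, and `answer` are hypothetical stand-ins invented for illustration and are not the noveum-trace API; refer to the SDK Integration Guide for the real calls.

```python
import time
from contextlib import contextmanager

# Hypothetical in-memory sink; a real SDK would export spans to a backend.
SPANS = []
_stack = []  # names of currently open spans, used to record the parent


@contextmanager
def trace_span(name, **attributes):
    """Record the name, parent, duration, and status of the wrapped block."""
    span = {
        "name": name,
        "parent": _stack[-1] if _stack else None,
        "attributes": dict(attributes),
        "status": "ok",
    }
    _stack.append(name)
    start = time.perf_counter()
    try:
        yield span  # the caller may attach more attributes
    except Exception as exc:
        span["status"] = "error"
        span["attributes"]["error"] = repr(exc)
        raise
    finally:
        span["duration_s"] = time.perf_counter() - start
        _stack.pop()
        SPANS.append(span)


def answer(question):
    # The outer span covers the whole agent step; the inner one covers the model call.
    with trace_span("agent.answer", question=question):
        with trace_span("llm.call", model="example-model") as span:
            reply = f"echo: {question}"  # placeholder for a real model call
            span["attributes"]["output_chars"] = len(reply)
        return reply


print(answer("What is tracing?"))
for s in SPANS:
    print(s["name"], "parent:", s["parent"], "status:", s["status"])
```

Spans are recorded when they close, so the inner `llm.call` span is stored before the outer `agent.answer` span; the `parent` field is what preserves the hierarchy.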

Step 2: Trace Collection - Noveum-Trace

Noveum Trace captures end-to-end traces across your AI application, including:

- LLM Operations: Model calls, token usage, costs

- RAG Pipelines: Document retrieval, embeddings, context assembly

- Agent Workflows: Multi-agent interactions, tool usage, decision trees

- Tool Calls: Function calls, tool executions, parameter passing

- API Calls: External service requests, responses, status codes

- DB Calls: Database queries, transactions, connection pooling

- Custom Operations: Business logic, external APIs, data processing

Step 3: Platform Visualization

View and analyze traces in the Noveum dashboard:

- Hierarchical Trace Views: Complete workflow visualization

- Performance Metrics: Latency, throughput, error rates

- Cost Analysis: Token usage, provider costs, optimization opportunities

- Real-time Monitoring: Live dashboards and intelligent alerting
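The cost analysis above comes down to multiplying token counts by per-token prices and grouping the result by operation. A minimal sketch of that arithmetic follows; the prices, model names, and span fields are made-up illustrative values, not real provider pricing or the Noveum trace schema.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1,000 tokens: (input, output).
PRICES_PER_1K = {
    "small-model": (0.50, 1.50),
    "large-model": (5.00, 15.00),
}


def span_cost(span):
    """Cost of one LLM span: tokens / 1000 * price, summed over input and output."""
    price_in, price_out = PRICES_PER_1K[span["model"]]
    return (span["input_tokens"] / 1000) * price_in + (span["output_tokens"] / 1000) * price_out


def cost_by_operation(spans):
    """Sum span costs per operation name to show which operations drive spending."""
    totals = defaultdict(float)
    for span in spans:
        totals[span["operation"]] += span_cost(span)
    return dict(totals)


spans = [
    {"operation": "summarize", "model": "small-model", "input_tokens": 2000, "output_tokens": 1000},
    {"operation": "answer", "model": "large-model", "input_tokens": 1000, "output_tokens": 200},
    {"operation": "answer", "model": "large-model", "input_tokens": 3000, "output_tokens": 800},
]

# Print the most expensive operation first.
for operation, cost in sorted(cost_by_operation(spans).items(), key=lambda kv: -kv[1]):
    print(f"{operation}: ${cost:.2f}")
```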

Step 4: Background ETL Processing

In the background, automated ETL jobs continuously process your traces:

- Dataset Creation: Convert traces to evaluation datasets automatically

- Model Evaluation: Run systematic evaluations with NovaEval scorers

- Score Generation: Calculate performance metrics and quality scores

- Dashboard Updates: Push results to real-time dashboards for analysis
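The pipeline above can be pictured as a small batch job: flatten traces into dataset rows, score each row, and aggregate the results. This is a sketch under assumptions; the trace shape, `traces_to_dataset`, and `keyword_overlap_scorer` are hypothetical, and the toy scorer is not a NovaEval scorer.

```python
from statistics import mean


def traces_to_dataset(traces):
    """Flatten traces into evaluation rows, one per LLM span."""
    rows = []
    for trace in traces:
        for span in trace["spans"]:
            if span["kind"] == "llm":
                rows.append({
                    "trace_id": trace["id"],
                    "input": span["input"],
                    "output": span["output"],
                    "context": span.get("context", ""),
                })
    return rows


def keyword_overlap_scorer(row):
    """Toy scorer: fraction of output words that also appear in the context."""
    out_words = row["output"].lower().split()
    ctx_words = set(row["context"].lower().split())
    if not out_words:
        return 0.0
    return sum(w in ctx_words for w in out_words) / len(out_words)


traces = [
    {"id": "t1", "spans": [
        {"kind": "retrieval", "query": "capital of France"},
        {"kind": "llm", "input": "capital of France?", "output": "paris is the capital",
         "context": "paris is the capital of france"},
    ]},
    {"id": "t2", "spans": [
        {"kind": "llm", "input": "capital of Peru?", "output": "bogota",
         "context": "lima is the capital of peru"},
    ]},
]

dataset = traces_to_dataset(traces)
scores = {row["trace_id"]: keyword_overlap_scorer(row) for row in dataset}
print(scores, "mean:", mean(scores.values()))
```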

Step 5: Score Visualization & Reasoning - NovaEval

Access detailed insights through the Noveum Dashboard:

- Call-by-Call Analysis: View scores and reasoning for every individual AI call

- Performance Breakdown: Understand why certain calls performed better or worse

- Reasoning Transparency: See the evaluation logic behind each score

- Interactive Exploration: Drill down into specific traces and spans for detailed analysis

Example:
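As an illustration, a per-call result might pair each score with its reasoning so that the weakest calls can be sorted to the top. The `CallScore` fields, the 0-10 scale, and the sample values below are hypothetical, not the Noveum dashboard's data model.

```python
from dataclasses import dataclass


@dataclass
class CallScore:
    span_id: str
    scorer: str
    score: float      # assumed to be on a 0-10 scale
    reasoning: str


results = [
    CallScore("span-1", "faithfulness", 9.0, "Answer is fully supported by the retrieved context."),
    CallScore("span-2", "faithfulness", 3.0, "Answer cites a figure that is absent from the context."),
    CallScore("span-3", "relevance", 6.5, "Answer addresses the question but omits the requested date."),
]


def lowest_first(scores, threshold=7.0):
    """Return calls scoring below the threshold, worst first."""
    return sorted((s for s in scores if s.score < threshold), key=lambda s: s.score)


for s in lowest_first(results):
    print(f"{s.span_id} [{s.scorer}] {s.score:.1f}: {s.reasoning}")
```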

Step 6: Intelligent Autofix Suggestions - NovaPilot

NovaPilot analyzes low-scoring traces and provides actionable recommendations:

- Pattern Recognition: Identifies common issues across failed or low-scoring traces

- Root Cause Analysis: Validates evaluation reasoning and pinpoints specific problems

- Suggested Fixes: Generates concrete improvement recommendations based on detected patterns

- Guardrail Examples: Proposes system prompt enhancements, such as adding safety guardrails or clarifying instructions

- Contextual Guidance: Provides implementation examples tailored to your specific use case

Example: If NovaPilot detects hallucinations in responses, it might suggest:
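An illustrative sketch of such a suggestion: appending a grounding guardrail to the system prompt. This is a hypothetical example, not actual NovaPilot output; the prompt text and the `apply_guardrail` helper are invented for the illustration.

```python
BASE_SYSTEM_PROMPT = "You are a support assistant for an online store."

# Hypothetical guardrail text of the kind an autofix suggestion could propose.
GROUNDING_GUARDRAIL = (
    "Answer only from the provided context. "
    "If the context does not contain the answer, say you do not know."
)


def apply_guardrail(system_prompt, guardrail):
    """Append the guardrail once; applying it again leaves the prompt unchanged."""
    if guardrail in system_prompt:
        return system_prompt
    return f"{system_prompt.rstrip()}\n\n{guardrail}"


patched = apply_guardrail(BASE_SYSTEM_PROMPT, GROUNDING_GUARDRAIL)
print(patched)
```

Making the helper idempotent means the same suggestion can be applied repeatedly without duplicating the guardrail in the prompt.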

Noveum Products

Noveum Trace

Trace all your AI calls

Learn More →

NovaEval

Evaluate with comprehensive scorer library

Learn More →

NovaPilot

Automated analysis & fixes

Learn More →

Noveum SDK

Python SDK with comprehensive API

Learn More →

🎯 Complete End-to-End AI Monitoring

Noveum traces everything, provides powerful dashboards for visualization, and offers comprehensive scoring and evaluation - delivering complete end-to-end monitoring for your AI applications.

🚀 Quick Start Actions

Get Started

5-minute setup guide to start tracing your AI applications

Quick Setup →

View Dashboard

Explore the Noveum platform and see your traces in action

Launch Dashboard →

Try Examples

See complete working examples of AI tracing in action

View Examples →

🤝 Community & Support

- 💬 Discord Community: Join our Discord

- 📧 Email Support: support@noveum.ai

- 🐛 Bug Reports: GitHub Issues

- 📖 Knowledge Base: Help Center

Ready to get started? Head to our SDK Integration Guide to begin tracing your AI applications in under 5 minutes!

Built by developers, for developers. Noveum.ai understands that AI applications are different, and we've designed our platform from the ground up to meet their unique observability needs.

Get Early Access to the Noveum.ai Platform

Be the first to be notified when we open the Noveum Platform to more users. All users get free access to the observability suite; early users also get free eval jobs and premium support for the first year.